Replies: 1 comment


Hi @bradyhunt, thanks for your interest and experiments.


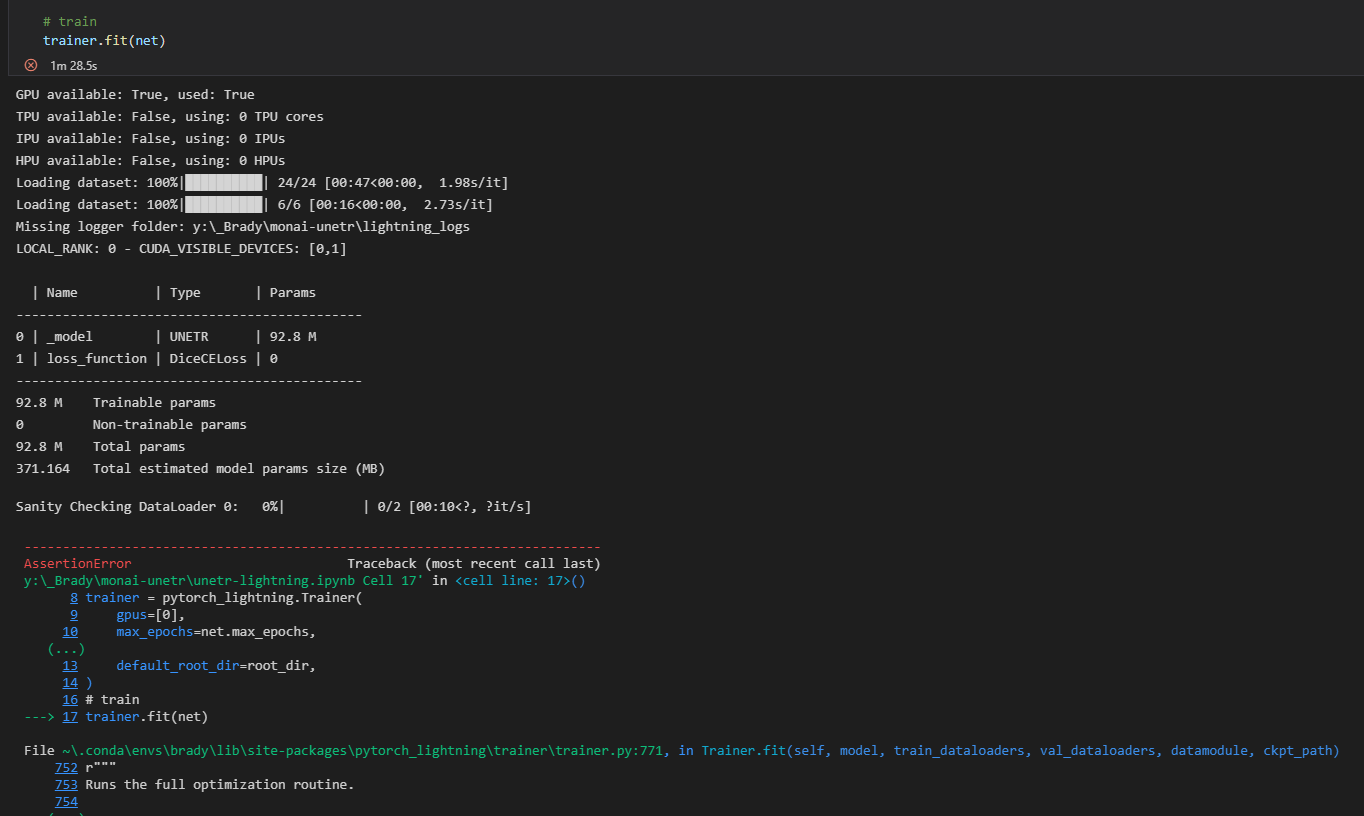

Hi @ahatamiz,

I am attempting to use the UNETR BTCV Segmentation tutorial as a starting point for a 3D segmentation project I am working on.

However, when I run the tutorial I get an AssertionError and cannot identify what is causing it. I have installed all the required dependencies, am running the version of MONAI the tutorial recommends (monai==0.6.0), and have not modified the other function definitions in the tutorial notebook.

I am copying my error output below, hoping you can provide some insight into what could be going wrong. Thanks!

GPU available: True, used: True

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

Loading dataset: 100%|██████████| 24/24 [00:47<00:00, 1.98s/it]

Loading dataset: 100%|██████████| 6/6 [00:16<00:00, 2.73s/it]

Missing logger folder: y:_Brady\monai-unetr\lightning_logs

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0,1]

| Name | Type | Params

0 | _model | UNETR | 92.8 M

1 | loss_function | DiceCELoss | 0

92.8 M Trainable params

0 Non-trainable params

92.8 M Total params

371.164 Total estimated model params size (MB)

Sanity Checking DataLoader 0: 0%| | 0/2 [00:10<?, ?it/s]

AssertionError Traceback (most recent call last)

y:_Brady\monai-unetr\unetr-lightning.ipynb Cell 17' in <cell line: 17>()

8 trainer = pytorch_lightning.Trainer(

9 gpus=[0],

10 max_epochs=net.max_epochs,

(...)

13 default_root_dir=root_dir,

14 )

16 # train

---> 17 trainer.fit(net)

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\trainer\trainer.py:771, in Trainer.fit(self, model, train_dataloaders, val_dataloaders, datamodule, ckpt_path)

752 r"""

753 Runs the full optimization routine.

754

(...)

768 datamodule: An instance of :class:`~pytorch_lightning.core.datamodule.LightningDataModule`.

769 """

770 self.strategy.model = model

--> 771 self._call_and_handle_interrupt(

772 self._fit_impl, model, train_dataloaders, val_dataloaders, datamodule, ckpt_path

773 )

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\trainer\trainer.py:724, in Trainer._call_and_handle_interrupt(self, trainer_fn, *args, **kwargs)

722 return self.strategy.launcher.launch(trainer_fn, *args, trainer=self, **kwargs)

723 else:

--> 724 return trainer_fn(*args, **kwargs)

725 # TODO: treat KeyboardInterrupt as BaseException (delete the code below) in v1.7

726 except KeyboardInterrupt as exception:

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\trainer\trainer.py:812, in Trainer._fit_impl(self, model, train_dataloaders, val_dataloaders, datamodule, ckpt_path)

808 ckpt_path = ckpt_path or self.resume_from_checkpoint

809 self._ckpt_path = self.__set_ckpt_path(

810 ckpt_path, model_provided=True, model_connected=self.lightning_module is not None

811 )

--> 812 results = self._run(model, ckpt_path=self.ckpt_path)

814 assert self.state.stopped

815 self.training = False

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\trainer\trainer.py:1237, in Trainer._run(self, model, ckpt_path)

1233 self._checkpoint_connector.restore_training_state()

1235 self._checkpoint_connector.resume_end()

-> 1237 results = self._run_stage()

1239 log.detail(f"{self.__class__.__name__}: trainer tearing down")

1240 self._teardown()

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\trainer\trainer.py:1324, in Trainer._run_stage(self)

1322 if self.predicting:

1323 return self._run_predict()

-> 1324 return self._run_train()

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\trainer\trainer.py:1346, in Trainer._run_train(self)

1343 self._pre_training_routine()

1345 with isolate_rng():

-> 1346 self._run_sanity_check()

1348 # enable train mode

1349 self.model.train()

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\trainer\trainer.py:1414, in Trainer._run_sanity_check(self)

1412 # run eval step

1413 with torch.no_grad():

-> 1414 val_loop.run()

1416 self._call_callback_hooks("on_sanity_check_end")

1418 # reset logger connector

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\loops\base.py:204, in Loop.run(self, *args, **kwargs)

202 try:

203 self.on_advance_start(*args, **kwargs)

--> 204 self.advance(*args, **kwargs)

205 self.on_advance_end()

206 self._restarting = False

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\loops\dataloader\evaluation_loop.py:153, in EvaluationLoop.advance(self, *args, **kwargs)

151 if self.num_dataloaders > 1:

152 kwargs["dataloader_idx"] = dataloader_idx

--> 153 dl_outputs = self.epoch_loop.run(self._data_fetcher, dl_max_batches, kwargs)

155 # store batch level output per dataloader

156 self._outputs.append(dl_outputs)

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\loops\base.py:204, in Loop.run(self, *args, **kwargs)

202 try:

203 self.on_advance_start(*args, **kwargs)

--> 204 self.advance(*args, **kwargs)

205 self.on_advance_end()

206 self._restarting = False

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\loops\epoch\evaluation_epoch_loop.py:127, in EvaluationEpochLoop.advance(self, data_fetcher, dl_max_batches, kwargs)

124 self.batch_progress.increment_started()

126 # lightning module methods

--> 127 output = self._evaluation_step(**kwargs)

128 output = self._evaluation_step_end(output)

130 self.batch_progress.increment_processed()

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\loops\epoch\evaluation_epoch_loop.py:222, in EvaluationEpochLoop._evaluation_step(self, **kwargs)

220 output = self.trainer._call_strategy_hook("test_step", *kwargs.values())

221 else:

--> 222 output = self.trainer._call_strategy_hook("validation_step", *kwargs.values())

224 return output

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\trainer\trainer.py:1766, in Trainer._call_strategy_hook(self, hook_name, *args, **kwargs)

1763 return

1765 with self.profiler.profile(f"[Strategy]{self.strategy.__class__.__name__}.{hook_name}"):

-> 1766 output = fn(*args, **kwargs)

1768 # restore current_fx when nested context

1769 pl_module._current_fx_name = prev_fx_name

File ~.conda\envs\brady\lib\site-packages\pytorch_lightning\strategies\strategy.py:344, in Strategy.validation_step(self, *args, **kwargs)

339 """The actual validation step.

340

341 See :meth:`~pytorch_lightning.core.lightning.LightningModule.validation_step` for more details.

342 """

343 with self.precision_plugin.val_step_context():

--> 344 return self.model.validation_step(*args, **kwargs)

y:_Brady\monai-unetr\unetr-lightning.ipynb Cell 13' in Net.validation_step(self, batch, batch_idx)

176 outputs = sliding_window_inference(

177 images, roi_size, sw_batch_size, self.forward

178 )

179 loss = self.loss_function(outputs, labels)

--> 180 outputs = [self.post_pred(i) for i in decollate_batch(outputs)]

181 labels = [self.post_label(i) for i in decollate_batch(labels)]

182 self.dice_metric(y_pred=outputs, y=labels)

y:_Brady\monai-unetr\unetr-lightning.ipynb Cell 13' in <listcomp>(.0)

176 outputs = sliding_window_inference(

177 images, roi_size, sw_batch_size, self.forward

178 )

179 loss = self.loss_function(outputs, labels)

--> 180 outputs = [self.post_pred(i) for i in decollate_batch(outputs)]

181 labels = [self.post_label(i) for i in decollate_batch(labels)]

182 self.dice_metric(y_pred=outputs, y=labels)

File ~.conda\envs\brady\lib\site-packages\monai\transforms\post\array.py:173, in AsDiscrete.__call__(self, img, argmax, to_onehot, n_classes, threshold_values, logit_thresh)

171 _nclasses = self.n_classes if n_classes is None else n_classes

172 if not isinstance(_nclasses, int):

--> 173 raise AssertionError("One of self.n_classes or n_classes must be an integer")

174 img = one_hot(img, num_classes=_nclasses, dim=0)

176 if threshold_values or self.threshold_values:

AssertionError: One of self.n_classes or n_classes must be an integer
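For reference, the check that raises here can be reproduced outside MONAI. The sketch below is a stand-in, not MONAI code: `resolve_n_classes` is a hypothetical helper that copies the logic shown at lines 171-173 of `array.py` in the traceback. It suggests the error appears whenever one-hot conversion is requested but neither the transform's stored `n_classes` nor the call-time `n_classes` is an integer. One possible cause, which is an assumption not confirmed anywhere in this thread, is that the notebook builds `AsDiscrete` using an argument style from a different MONAI release, so `n_classes` is never set.

```python
from typing import Optional


def resolve_n_classes(stored_n_classes: Optional[int],
                      n_classes: Optional[int] = None) -> int:
    """Hypothetical stand-in for the check in AsDiscrete.__call__ (monai 0.6.0).

    `stored_n_classes` plays the role of `self.n_classes`; `n_classes` is the
    per-call override. The names and this helper are illustrative only.
    """
    # Same precedence as the traceback: the per-call value wins if given.
    _nclasses = stored_n_classes if n_classes is None else n_classes
    if not isinstance(_nclasses, int):
        raise AssertionError("One of self.n_classes or n_classes must be an integer")
    return _nclasses


# An integer class count set on the transform passes the check.
print(resolve_n_classes(14))

# With no class count set anywhere, the same AssertionError is raised.
try:
    resolve_n_classes(None)
except AssertionError as err:
    print(f"AssertionError: {err}")
```

If this is what is happening, printing the arguments used to build `post_pred` and `post_label` in the notebook and comparing them with the `AsDiscrete` signature of the installed MONAI version should show whether an integer `n_classes` is being passed.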
