diff --git a/fern/apis/api/generators.yml b/fern/apis/api/generators.yml

index d3dd2b4a..4836db26 100644

--- a/fern/apis/api/generators.yml

+++ b/fern/apis/api/generators.yml

@@ -10,7 +10,7 @@ groups:

python-sdk:

generators:

- name: fernapi/fern-python-sdk

- version: 4.3.8

+ version: 4.3.9

disable-examples: true

api:

settings:

@@ -47,7 +47,7 @@ groups:

java-sdk:

generators:

- name: fernapi/fern-java-sdk

- version: 2.2.0

+ version: 2.3.1

output:

location: maven

coordinate: dev.vapi:server-sdk

diff --git a/fern/apis/api/openapi.json b/fern/apis/api/openapi.json

index 1a6dfb25..98d78d56 100644

--- a/fern/apis/api/openapi.json

+++ b/fern/apis/api/openapi.json

@@ -15580,6 +15580,20 @@

"clientSecret"

]

},

+ "Oauth2AuthenticationSession": {

+ "type": "object",

+ "properties": {

+ "accessToken": {

+ "type": "string",

+ "description": "This is the OAuth2 access token."

+ },

+ "expiresAt": {

+ "format": "date-time",

+ "type": "string",

+ "description": "This is the OAuth2 access token expiration."

+ }

+ }

+ },

"CustomLLMCredential": {

"type": "object",

"properties": {

@@ -15620,6 +15634,14 @@

"type": "string",

"description": "This is the ISO 8601 date-time string of when the assistant was last updated."

},

+ "authenticationSession": {

+ "description": "This is the authentication session for the credential. Available for credentials that have an authentication plan.",

+ "allOf": [

+ {

+ "$ref": "#/components/schemas/Oauth2AuthenticationSession"

+ }

+ ]

+ },

"name": {

"type": "string",

"description": "This is the name of credential. This is just for your reference.",

@@ -16928,6 +16950,14 @@

"type": "string",

"description": "This is the ISO 8601 date-time string of when the assistant was last updated."

},

+ "authenticationSession": {

+ "description": "This is the authentication session for the credential. Available for credentials that have an authentication plan.",

+ "allOf": [

+ {

+ "$ref": "#/components/schemas/Oauth2AuthenticationSession"

+ }

+ ]

+ },

"name": {

"type": "string",

"description": "This is the name of credential. This is just for your reference.",

@@ -16941,7 +16971,8 @@

"id",

"orgId",

"createdAt",

- "updatedAt"

+ "updatedAt",

+ "authenticationSession"

]

},

"XAiCredential": {

diff --git a/changelog/2024-12-05.mdx b/fern/changelog/2024-12-05.mdx

similarity index 55%

rename from changelog/2024-12-05.mdx

rename to fern/changelog/2024-12-05.mdx

index a1025675..ab73b8a5 100644

--- a/changelog/2024-12-05.mdx

+++ b/fern/changelog/2024-12-05.mdx

@@ -1,4 +1,6 @@

-1. **OAuth2 Support for Custom LLM Credentials and Webhooks**: You can now secure access to your [custom LLMs](https://docs.vapi.ai/customization/custom-llm/using-your-server#step-2-configuring-vapi-with-custom-llm) and [server urls (aka webhooks)](https://docs.vapi.ai/server-url) using OAuth2 (RFC 6749). Create a webhook credential with `CreateWebhookCredentialDTO` and specify the following information.

+1. **OAuth2 Support for Custom LLM Credentials and Webhooks**: You can now authorize access to your [custom LLMs](https://docs.vapi.ai/customization/custom-llm/using-your-server#step-2-configuring-vapi-with-custom-llm) and [server urls (aka webhooks)](https://docs.vapi.ai/server-url) using OAuth2 (RFC 6749).

+

+For example, create a webhook credential with `CreateWebhookCredentialDTO` with the following payload:

```json

{

@@ -13,4 +15,10 @@

}

```

+This returns a [`WebhookCredential`](https://api.vapi.ai/api) object as follows:

+

+

+  +

+

3. **Removal of Canonical Knowledge Base**: The ability to create, update, and use canonical knowledge bases in your assistant has been removed from the API (as custom knowledge bases and the Trieve integration support a superset of this functionality). Please update your implementations, as endpoints and models referencing canonical knowledge base schemas are no longer available.

\ No newline at end of file
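
As a rough illustration of what an OAuth2 (RFC 6749) credential implies on the wire, the sketch below builds the form-encoded body of a client-credentials token request (RFC 6749, section 4.4). That this is the grant type Vapi uses is an assumption, as are the helper name `build_token_request` and the placeholder URL and client values. Nothing is sent over the network.

```python
from urllib.parse import urlencode


def build_token_request(url: str, client_id: str, client_secret: str) -> dict:
    """Describe a client-credentials token request without sending it.

    Hypothetical helper: assumes the token endpoint accepts the client
    credentials in the request body rather than via HTTP Basic auth.
    """
    body = urlencode(
        {
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
        }
    )
    return {
        "method": "POST",
        "url": url,
        "headers": {"Content-Type": "application/x-www-form-urlencoded"},
        "body": body,
    }


# Placeholder values only; substitute your own token endpoint and client.
request = build_token_request(
    "https://your-url.com/your/path/token", "your-client-id", "your-client-secret"
)
print(request["body"])
```

A real token endpoint may instead require HTTP Basic authentication or extra parameters such as `scope`, so check your authorization server's documentation.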

diff --git a/fern/changelog/2024-12-06.mdx b/fern/changelog/2024-12-06.mdx

new file mode 100644

index 00000000..d9d6b49a

--- /dev/null

+++ b/fern/changelog/2024-12-06.mdx

@@ -0,0 +1,24 @@

+1. **OAuth 2 Authentication for Custom LLM Models and Webhooks**: In addition to [AuthZ](https://www.okta.com/identity-101/authentication-vs-authorization/), you can now authenticate users accessing your [custom LLMs](https://docs.vapi.ai/customization/custom-llm/using-your-server#step-2-configuring-vapi-with-custom-llm) and [server urls (aka webhooks)](https://docs.vapi.ai/server-url) using OAuth2 (RFC 6749). Use the `authenticationSession` dictionary, which contains an `accessToken` and an `expiresAt` datetime, to authenticate further requests to your custom LLM or server URL.

+

+For example, create a custom LLM credential with `CreateCustomLLMCredentialDTO` using the following payload:

+```json

+{

+ "provider": "custom-llm",

+ "apiKey": "your-api-key-max-10000-characters",

+ "authenticationPlan": {

+ "type": "oauth2",

+ "url": "https://your-url.com/your/path/token",

+ "clientId": "your-client-id",

+ "clientSecret": "your-client-secret"

+ },

+ "name": "your-credential-name-between-1-and-40-characters"

+}

+```

+

+This returns a [`CustomLLMCredential`](https://api.vapi.ai/api) object as follows:

+

+

+  +

+

+This can be used to authenticate successive requests to your custom LLM or server URL.
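
To make the `authenticationSession` fields concrete, here is a minimal sketch of deciding whether a stored session can still be used, based on the `accessToken` and `expiresAt` fields from the `Oauth2AuthenticationSession` schema. The function name `session_is_usable`, the 30-second leeway, and the treatment of timezone-less timestamps as UTC are assumptions for illustration, not part of the Vapi API.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def session_is_usable(
    session: dict,
    now: Optional[datetime] = None,
    leeway_seconds: int = 30,
) -> bool:
    """Return True if the session has a token that is not about to expire."""
    token = session.get("accessToken")
    expires_at = session.get("expiresAt")
    if not token or not expires_at:
        return False
    # fromisoformat() rejects a trailing "Z" before Python 3.11, so normalize it.
    expiry = datetime.fromisoformat(expires_at.replace("Z", "+00:00"))
    if expiry.tzinfo is None:
        # Assumption: a timestamp without an offset is in UTC.
        expiry = expiry.replace(tzinfo=timezone.utc)
    current = now or datetime.now(timezone.utc)
    return current + timedelta(seconds=leeway_seconds) < expiry


# Hypothetical session values, shaped like the schema above.
example_session = {"accessToken": "token-value", "expiresAt": "2024-12-06T12:00:00Z"}
check_time = datetime(2024, 12, 6, 11, 0, tzinfo=timezone.utc)
print(session_is_usable(example_session, now=check_time))
```

The leeway guards against a token expiring between the check and the request that uses it.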

diff --git a/fern/changelog/2024-12-09.mdx b/fern/changelog/2024-12-09.mdx

new file mode 100644

index 00000000..bbac5439

--- /dev/null

+++ b/fern/changelog/2024-12-09.mdx

@@ -0,0 +1 @@

+1. **Improved Tavus Video Processing Error Messages**: Your call `endedReason` now includes detailed error messages for `pipeline-error-tavus-video-failed`. Use this to detect and manage scenarios where the Tavus video processing pipeline fails during a call.

\ No newline at end of file
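
One way to act on this `endedReason` is sketched below. It assumes the call is available as a dictionary parsed from JSON and that `endedReason` is a string containing the `pipeline-error-tavus-video-failed` code. The exact format of the detailed message is not shown in this changelog, so the check uses a substring match, and the helper name is hypothetical.

```python
TAVUS_VIDEO_FAILED = "pipeline-error-tavus-video-failed"


def is_tavus_video_failure(call: dict) -> bool:
    """Return True when the call's endedReason mentions the Tavus failure code."""
    # A missing or null endedReason is treated as "no failure reported".
    reason = call.get("endedReason") or ""
    return TAVUS_VIDEO_FAILED in reason


# Hypothetical call payloads for illustration.
failed_call = {"id": "call-1", "endedReason": "pipeline-error-tavus-video-failed"}
normal_call = {"id": "call-2", "endedReason": "customer-ended-call"}
print(is_tavus_video_failure(failed_call), is_tavus_video_failure(normal_call))
```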

diff --git a/fern/customization/custom-voices/tavus.mdx b/fern/customization/custom-voices/tavus.mdx

index 6bb6c0f6..18446af3 100644

--- a/fern/customization/custom-voices/tavus.mdx

+++ b/fern/customization/custom-voices/tavus.mdx

@@ -7,7 +7,7 @@ slug: customization/custom-voices/tavus

This guide outlines the procedure for integrating your custom replica with Tavus through the VAPI platform.

-An API subscription is required for this process to work.

+An API subscription is required for this process to work. This process is only required if you would like to use your **custom Tavus replicas**. This process is not required to use stock replicas on the Vapi platform.

To integrate your custom replica with [Tavus](https://platform.tavus.io/) using the VAPI platform, follow these steps.

diff --git a/fern/docs.yml b/fern/docs.yml

index 36919bb7..75b0dc2e 100644

--- a/fern/docs.yml

+++ b/fern/docs.yml

@@ -147,6 +147,8 @@ navigation:

path: examples/pizza-website.mdx

- page: Python Outbound Snippet

path: examples/outbound-call-python.mdx

+ - page: Billing FAQ

+ path: quickstart/billing.mdx

- page: Code Resources

path: resources.mdx

- section: Customization

@@ -349,6 +351,10 @@ navigation:

path: providers/cloud/gcp.mdx

- page: Cloudflare R2

path: providers/cloud/cloudflare.mdx

+ - section: Observability

+ contents:

+ - page: Langfuse

+ path: providers/observability/langfuse.mdx

- page: Voiceflow

path: providers/voiceflow.mdx

- section: Security & Privacy

@@ -532,7 +538,7 @@ redirects:

destination: "/call-forwarding"

- source: "/prompting_guide"

destination: "/prompting-guide"

- - source: /community/videos

- destination: /community/appointment-scheduling

- - source: /enterprise

- destination: /enterprise/plans

+ - source: "/community/videos"

+ destination: "/community/appointment-scheduling"

+ - source: "/enterprise"

+ destination: "/enterprise/plans"

diff --git a/fern/providers/observability/langfuse.mdx b/fern/providers/observability/langfuse.mdx

new file mode 100644

index 00000000..9e62986e

--- /dev/null

+++ b/fern/providers/observability/langfuse.mdx

@@ -0,0 +1,76 @@

+---

+title: Langfuse Integration with Vapi

+description: Integrate Vapi with Langfuse for enhanced voice AI telemetry monitoring, enabling improved performance and reliability of your AI applications.

+slug: providers/observability/langfuse

+---

+

+# Vapi Integration

+

+Vapi natively integrates with Langfuse, allowing you to send traces directly to Langfuse for enhanced telemetry monitoring. This integration enables you to gain deeper insights into your voice AI applications and improve their performance and reliability.

+

+

+

+## What is Langfuse?

+

+[Langfuse](https://langfuse.com/) is an open source LLM engineering platform designed to provide better **[observability](/docs/tracing)** and **[evaluations](/docs/scores/overview)** into AI applications. It helps developers track, analyze, and visualize traces from AI interactions, enabling better performance tuning, debugging, and optimization of AI agents.

+

+## Get Started

+

+

+

+

+First, you'll need your Langfuse credentials:

+

+- **Secret Key**

+- **Public Key**

+- **Host URL**

+

+You can obtain these by signing up for [Langfuse Cloud](https://cloud.langfuse.com/) or [self-hosting Langfuse](https://langfuse.com/docs/deployment/self-host).

+

+

+

+

+

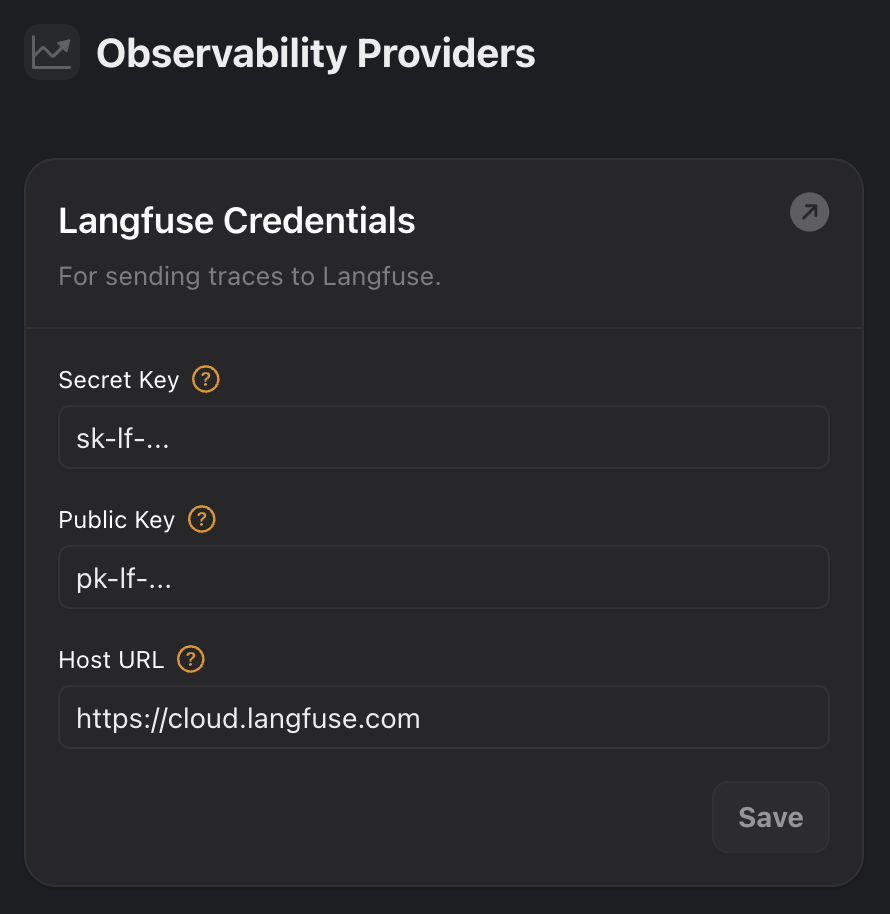

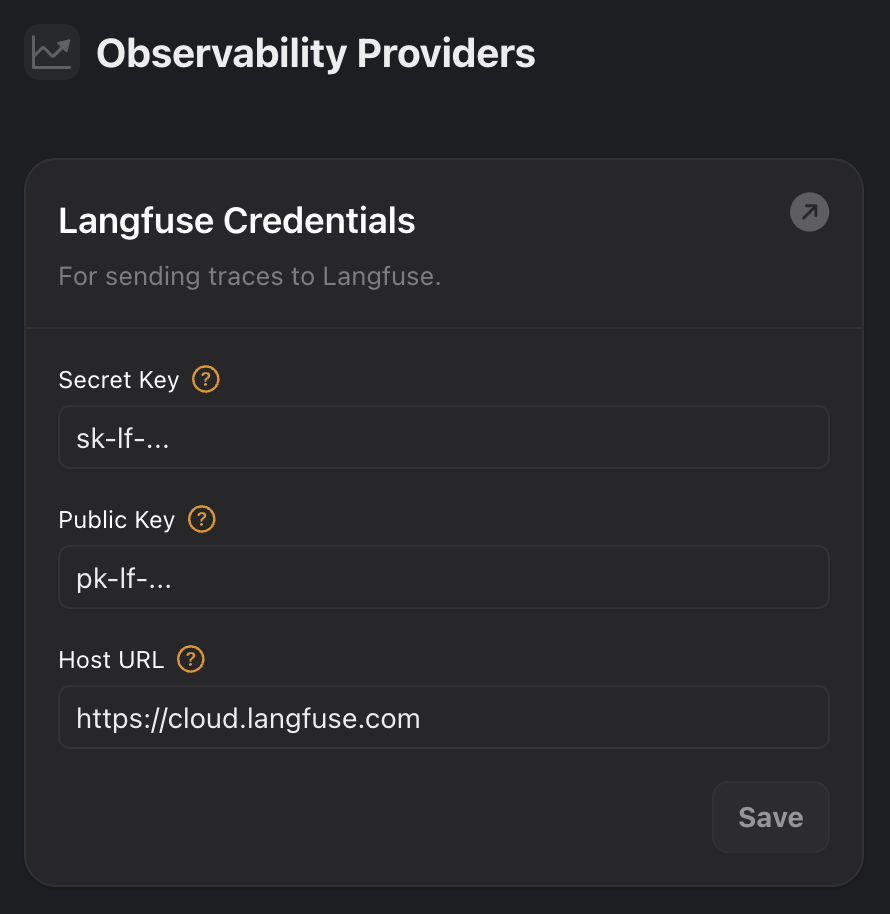

+Log in to your Vapi dashboard and navigate to the [Provider Credentials page](https://dashboard.vapi.ai/keys).

+

+Under the **Observability Providers** section, you'll find an option for **Langfuse**. Enter your Langfuse credentials:

+

+- **Secret Key**

+- **Public Key**

+- **Host URL** (US data region: `https://us.cloud.langfuse.com`, EU data region: `https://cloud.langfuse.com`)

+

+Click **Save** to update your credentials.

+

+

+

+

+

+

+

+

+

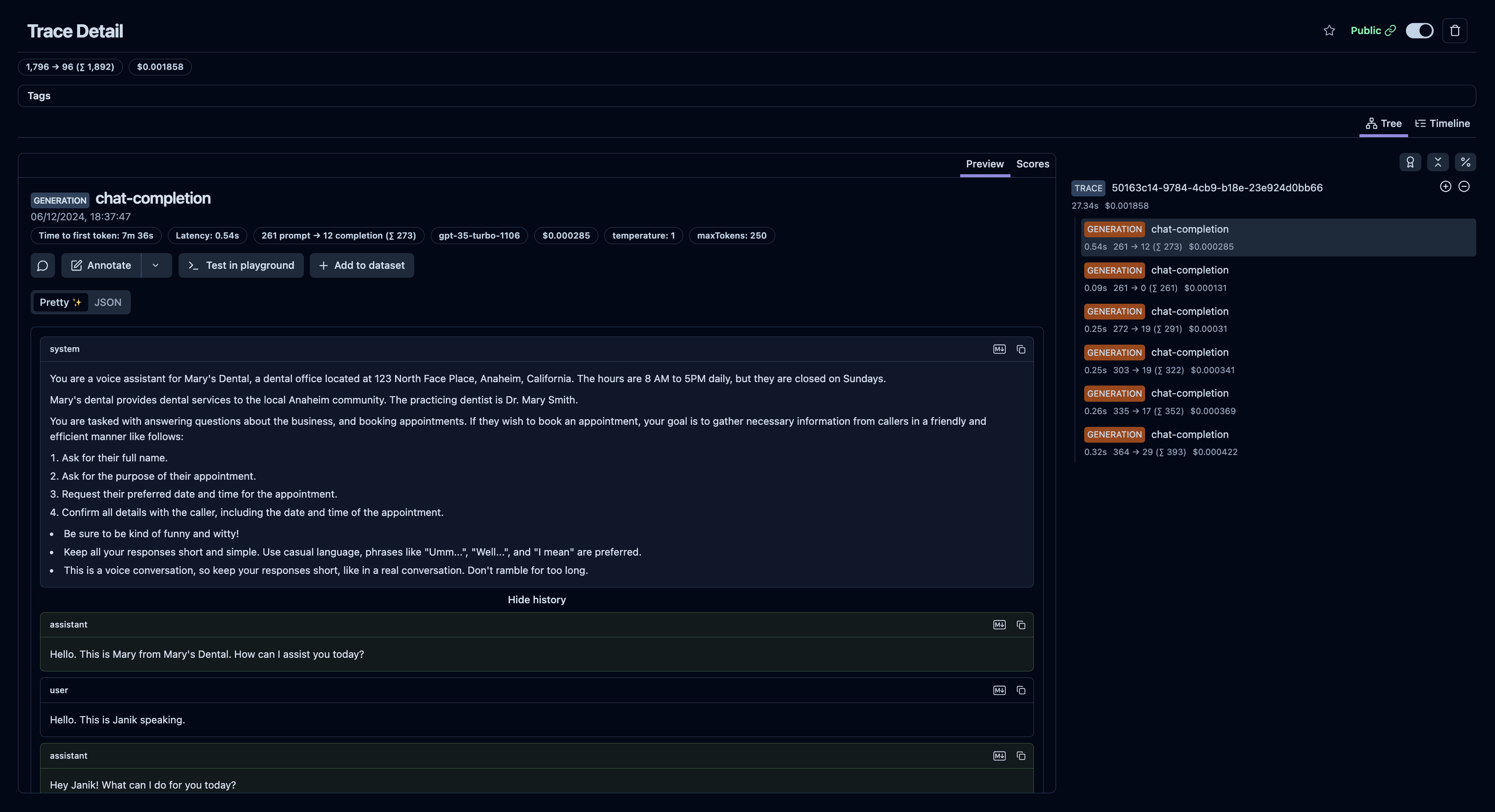

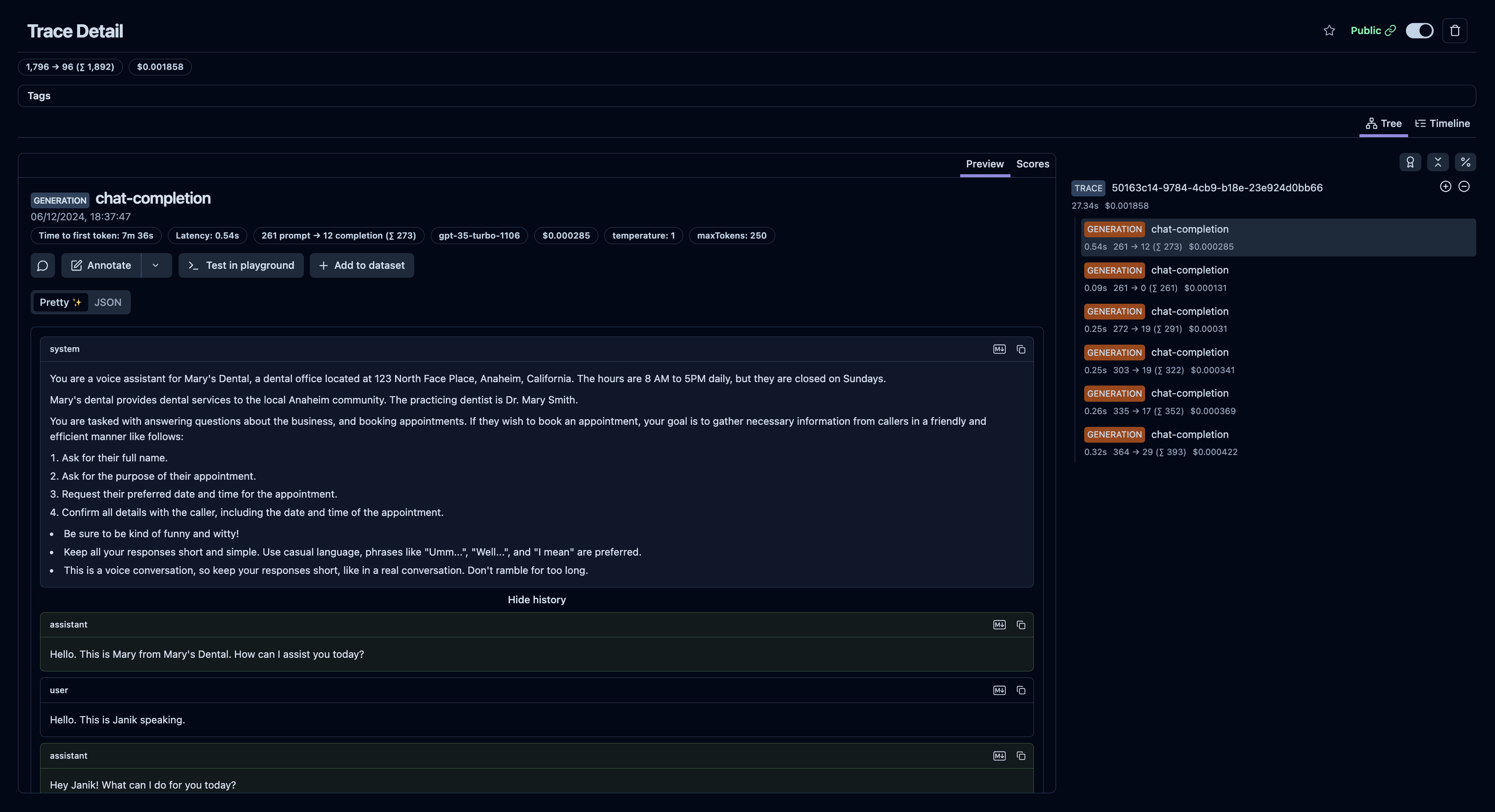

+Once you've added your credentials, you should start seeing traces in your Langfuse dashboard for every conversation your agents have.

+

+

+

+

+

+Example trace in Langfuse: https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/50163c14-9784-4cb9-b18e-23e924d0bb66

+

+

+

+

+

+To make the most out of this integration, you can now use Langfuse's [evaluation](https://langfuse.com/docs/scores/overview) and [debugging](https://langfuse.com/docs/analytics/overview) tools to analyze and improve the performance of your voice AI agents.

+

+

+

\ No newline at end of file

diff --git a/fern/quickstart/billing.mdx b/fern/quickstart/billing.mdx

new file mode 100644

index 00000000..832fa0ba

--- /dev/null

+++ b/fern/quickstart/billing.mdx

@@ -0,0 +1,71 @@

+---

+title: Billing FAQ

+subtitle: How billing with Vapi works.

+slug: quickstart/billing

+---

+

+### Overview

+Vapi uses a credit-based billing system where credits serve as the platform's currency. Every billable action, including making calls and purchasing add-ons, requires credits. One credit equals one US dollar ($1 = 1 credit).

+

+Each new trial account receives 10 complimentary credits in its wallet to help you get started. These credits can be used for making calls, but not for purchasing add-ons.

+

+To continue using Vapi after your trial credits are depleted, you'll need to:

+1. Add your payment details

+2. Purchase additional credits

+3. Your wallet will then be upgraded to pay-as-you-go status.

+

+

+ Your wallet will be marked as frozen while your credit balance is negative. Frozen wallets are unable to make calls or purchase add-ons.

+

+

+### What is auto-reload?

+

+Auto-reload is a billing mechanism in which Vapi automatically tops up your credits when your balance hits a certain threshold. It can be enabled through the [billing page](https://dashboard.vapi.ai/org/billing), and we highly recommend enabling it to prevent billing-related operational issues.

+

+

+ Auto-reload amounts must be at least \$10. We recommend setting a threshold above \$0.

+

+

+

+

+

+
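
The wallet rules described above (frozen while the balance is negative, auto-reload at a threshold, a minimum reload amount of $10) can be modelled roughly as follows. This is a sketch of the stated rules, not Vapi's implementation: the `Wallet` class, its field names, and the choice to reload when the balance is at or below the threshold are all assumptions.

```python
from dataclasses import dataclass

MIN_RELOAD_AMOUNT = 10.0  # from the FAQ: auto-reload amounts must be at least $10


@dataclass
class Wallet:
    balance: float           # credits, where 1 credit = 1 USD
    reload_threshold: float  # top up once the balance is at or below this
    reload_amount: float     # credits added per top-up

    @property
    def is_frozen(self) -> bool:
        # The FAQ says a wallet is frozen while its balance is negative.
        return self.balance < 0

    def apply_auto_reload(self) -> bool:
        """Top up if the balance has reached the threshold; report whether it did."""
        if self.reload_amount < MIN_RELOAD_AMOUNT:
            raise ValueError("auto-reload amount must be at least 10 credits")
        if self.balance <= self.reload_threshold:
            self.balance += self.reload_amount
            return True
        return False


wallet = Wallet(balance=4.0, reload_threshold=5.0, reload_amount=20.0)
reloaded = wallet.apply_auto_reload()
print(reloaded, wallet.balance, wallet.is_frozen)
```

Floats keep the sketch short; real billing code would normally use `decimal.Decimal` or integer cents.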

+### What are add-ons?

+Add-ons are platform enhancements that you may purchase. They are purchased through the [billing add-ons page](https://dashboard.vapi.ai/org/billing/add-ons).

+

+The following add-ons are currently available:

+| Add-on | Price (credits / month) | Description |

+|--------|-------------------------|-------------|

+| Phone Numbers | 2 | Twilio phone numbers for your calls |

+| Reserved Concurrency Lines | 10 | Guaranteed capacity for concurrent calls |

+| HIPAA Compliance | 1,000 | HIPAA Compliance and a BAA agreement |

+| Slack Support | 5,000 | Priority support via dedicated Slack channel |

+

+Here's how the billing works:

+- You'll be charged a prorated amount for the remainder of the current billing cycle when you first purchase an add-on

+- Subsequently, you'll be billed the full amount at the start of each billing cycle (the 1st of each month)

+- If you cancel an add-on, you'll receive a prorated refund for the unused portion of the billing cycle.

+- Add-ons can be cancelled at any time through the billing page

+

+

+ If your wallet is frozen at the time of billing, all add-ons will be automatically cancelled. You'll need to repurchase them once your wallet is reactivated.

+

+

+### How do I download invoices for my credit purchases?

+

+You may download invoices within the [billing page](https://dashboard.vapi.ai/billing).

+

+Find the relevant credit purchase within the payment history table, and click on **Download Invoice**. You may then optionally fill in extra details, which will be reflected on the invoice.

+

+

+

+

+

+
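
The add-on proration described in the billing FAQ above can be illustrated with a small calculation. The FAQ does not give the exact formula, so this sketch assumes day-based proration over the calendar month, with the purchase day counted as billable. The function name is hypothetical.

```python
import calendar
from datetime import date


def prorated_charge(monthly_price: float, purchase_date: date) -> float:
    """Charge for the rest of the month, assuming simple day-based proration."""
    days_in_month = calendar.monthrange(purchase_date.year, purchase_date.month)[1]
    # Assumption: the purchase day itself counts toward the remaining days.
    remaining_days = days_in_month - purchase_date.day + 1
    return monthly_price * remaining_days / days_in_month


# Reserved Concurrency Lines cost 10 credits per month in the table above.
# Bought on 16 November (a 30-day month): 15 of 30 days remain.
print(prorated_charge(10, date(2024, 11, 16)))  # prints 5.0
```

Under the same assumption, a refund on cancellation would use the days left after the cancellation date in place of `remaining_days`.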

diff --git a/fern/static/images/changelog/custom-llm-credential.png b/fern/static/images/changelog/custom-llm-credential.png

new file mode 100644

index 00000000..48076e16

Binary files /dev/null and b/fern/static/images/changelog/custom-llm-credential.png differ

diff --git a/fern/static/images/changelog/webhook-credential.png b/fern/static/images/changelog/webhook-credential.png

new file mode 100644

index 00000000..47f2159b

Binary files /dev/null and b/fern/static/images/changelog/webhook-credential.png differ

diff --git a/fern/static/images/quickstart/billing/auto-reload.png b/fern/static/images/quickstart/billing/auto-reload.png

new file mode 100644

index 00000000..3b9b3d5d

Binary files /dev/null and b/fern/static/images/quickstart/billing/auto-reload.png differ

diff --git a/fern/static/images/quickstart/billing/download-invoice.png b/fern/static/images/quickstart/billing/download-invoice.png

new file mode 100644

index 00000000..78154ab5

Binary files /dev/null and b/fern/static/images/quickstart/billing/download-invoice.png differ

diff --git a/fern/static/images/quickstart/billing/invoice-detail-form.png b/fern/static/images/quickstart/billing/invoice-detail-form.png

new file mode 100644

index 00000000..6807e78e

Binary files /dev/null and b/fern/static/images/quickstart/billing/invoice-detail-form.png differ

diff --git a/fern/static/images/quickstart/billing/sample-invoice.png b/fern/static/images/quickstart/billing/sample-invoice.png

new file mode 100644

index 00000000..6dee1f88

Binary files /dev/null and b/fern/static/images/quickstart/billing/sample-invoice.png differ

+

+

diff --git a/fern/static/images/changelog/custom-llm-credential.png b/fern/static/images/changelog/custom-llm-credential.png

new file mode 100644

index 00000000..48076e16

Binary files /dev/null and b/fern/static/images/changelog/custom-llm-credential.png differ

diff --git a/fern/static/images/changelog/webhook-credential.png b/fern/static/images/changelog/webhook-credential.png

new file mode 100644

index 00000000..47f2159b

Binary files /dev/null and b/fern/static/images/changelog/webhook-credential.png differ

diff --git a/fern/static/images/quickstart/billing/auto-reload.png b/fern/static/images/quickstart/billing/auto-reload.png

new file mode 100644

index 00000000..3b9b3d5d

Binary files /dev/null and b/fern/static/images/quickstart/billing/auto-reload.png differ

diff --git a/fern/static/images/quickstart/billing/download-invoice.png b/fern/static/images/quickstart/billing/download-invoice.png

new file mode 100644

index 00000000..78154ab5

Binary files /dev/null and b/fern/static/images/quickstart/billing/download-invoice.png differ

diff --git a/fern/static/images/quickstart/billing/invoice-detail-form.png b/fern/static/images/quickstart/billing/invoice-detail-form.png

new file mode 100644

index 00000000..6807e78e

Binary files /dev/null and b/fern/static/images/quickstart/billing/invoice-detail-form.png differ

diff --git a/fern/static/images/quickstart/billing/sample-invoice.png b/fern/static/images/quickstart/billing/sample-invoice.png

new file mode 100644

index 00000000..6dee1f88

Binary files /dev/null and b/fern/static/images/quickstart/billing/sample-invoice.png differ

+

+

3. **Removal of Canonical Knowledge Base**: The ability to create, update, and use canoncial knowledge bases in your assistant has been removed from the API(as custom knowledge bases and the Trieve integration supports as superset of this functionality). Please update your implementations as endpoints and models referencing canoncial knowledge base schemas are no longer available.

\ No newline at end of file

diff --git a/fern/changelog/2024-12-06.mdx b/fern/changelog/2024-12-06.mdx

new file mode 100644

index 00000000..d9d6b49a

--- /dev/null

+++ b/fern/changelog/2024-12-06.mdx

@@ -0,0 +1,24 @@

+1. **OAuth 2 Authentication for Custom LLM Models and Webhooks**: In addition to (AuthZ)[https://www.okta.com/identity-101/authentication-vs-authorization/], you can now now authenticate users accessing your [custom LLMs](https://docs.vapi.ai/customization/custom-llm/using-your-server#step-2-configuring-vapi-with-custom-llm) and [server urls (aka webhooks)](https://docs.vapi.ai/server-url) using OAuth2 (RFC 6749). Use the `authenticationSession` dictionary which contains an `accessToken` and `expiresAt` datetime to authenticate further requests to your custom LLM or server URL.

+

+For example, create a webhook credential with `CreateCustomLLMCredentialDTO` with the following payload:

+```json

+{

+ "provider": "custom-llm",

+ "apiKey": "your-api-key-max-10000-characters",

+ "authenticationPlan": {

+ "type": "oauth2",

+ "url": "https://your-url.com/your/path/token",

+ "clientId": "your-client-id",

+ "clientSecret": "your-client-secret"

+ },

+ "name": "your-credential-name-between-1-and-40-characters"

+}

+```

+

+This returns a [`CustomLLMCredential`](https://api.vapi.ai/api) object as follows:

+

+

+

+

+

3. **Removal of Canonical Knowledge Base**: The ability to create, update, and use canoncial knowledge bases in your assistant has been removed from the API(as custom knowledge bases and the Trieve integration supports as superset of this functionality). Please update your implementations as endpoints and models referencing canoncial knowledge base schemas are no longer available.

\ No newline at end of file

diff --git a/fern/changelog/2024-12-06.mdx b/fern/changelog/2024-12-06.mdx

new file mode 100644

index 00000000..d9d6b49a

--- /dev/null

+++ b/fern/changelog/2024-12-06.mdx

@@ -0,0 +1,24 @@

+1. **OAuth 2 Authentication for Custom LLM Models and Webhooks**: In addition to (AuthZ)[https://www.okta.com/identity-101/authentication-vs-authorization/], you can now now authenticate users accessing your [custom LLMs](https://docs.vapi.ai/customization/custom-llm/using-your-server#step-2-configuring-vapi-with-custom-llm) and [server urls (aka webhooks)](https://docs.vapi.ai/server-url) using OAuth2 (RFC 6749). Use the `authenticationSession` dictionary which contains an `accessToken` and `expiresAt` datetime to authenticate further requests to your custom LLM or server URL.

+

+For example, create a webhook credential with `CreateCustomLLMCredentialDTO` with the following payload:

+```json

+{

+ "provider": "custom-llm",

+ "apiKey": "your-api-key-max-10000-characters",

+ "authenticationPlan": {

+ "type": "oauth2",

+ "url": "https://your-url.com/your/path/token",

+ "clientId": "your-client-id",

+ "clientSecret": "your-client-secret"

+ },

+ "name": "your-credential-name-between-1-and-40-characters"

+}

+```

+

+This returns a [`CustomLLMCredential`](https://api.vapi.ai/api) object as follows:

+

+

+  +

+

+This can be used to authenticate successive requests to your custom LLM or server URL.

diff --git a/fern/changelog/2024-12-09.mdx b/fern/changelog/2024-12-09.mdx

new file mode 100644

index 00000000..bbac5439

--- /dev/null

+++ b/fern/changelog/2024-12-09.mdx

@@ -0,0 +1 @@

+1. **Improved Tavus Video Processing Error Messages**: Your call `endedReason` now includes detailed error messages for `pipeline-error-tavus-video-failed`. Use this to detect and manage scenarios where the Tavus video processing pipeline fails during a call.

\ No newline at end of file

diff --git a/fern/customization/custom-voices/tavus.mdx b/fern/customization/custom-voices/tavus.mdx

index 6bb6c0f6..18446af3 100644

--- a/fern/customization/custom-voices/tavus.mdx

+++ b/fern/customization/custom-voices/tavus.mdx

@@ -7,7 +7,7 @@ slug: customization/custom-voices/tavus

This guide outlines the procedure for integrating your custom replica with Tavus through the VAPI platform.

-

+

+

- +

+

+### What are add-ons?

+Add-ons are platform enhancements that you can purchase through the [billing add-ons page](https://dashboard.vapi.ai/org/billing/add-ons).

+

+The following add-ons are currently available:

+| Add-on | Price (credits / month) | Description |

+|--------|-------------------------|-------------|

+| Phone Numbers | 2 | Twilio phone numbers for your calls |

+| Reserved Concurrency Lines | 10 | Guaranteed capacity for concurrent calls |

+| HIPAA Compliance | 1,000 | HIPAA compliance and a Business Associate Agreement (BAA) |

+| Slack Support | 5,000 | Priority support via dedicated Slack channel |

+

+Here's how the billing works:

+- When you first purchase an add-on, you'll be charged a prorated amount for the remainder of the current billing cycle.

+- After that, you'll be billed the full amount at the start of each billing cycle (the 1st of each month).

+- If you cancel an add-on, you'll receive a prorated refund for the unused portion of the billing cycle.

+- You can cancel add-ons at any time through the billing page.
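
Reviewer sketch (not part of the diff): the proration rule in the bullets above can be illustrated with a small calculation. The text does not state the proration granularity, so this sketch assumes day-based proration over the calendar month, counts the purchase day as billable, and rounds to two decimals. The function name is hypothetical.

```python
import calendar
from datetime import date


def prorated_charge(monthly_price: float, purchase_day: date) -> float:
    """Charge for the rest of the current monthly cycle, purchase day included.

    Assumptions: cycles run from the 1st to the last day of the calendar
    month, proration is per day, and amounts are rounded to 2 decimals.
    """
    days_in_month = calendar.monthrange(purchase_day.year, purchase_day.month)[1]
    remaining_days = days_in_month - purchase_day.day + 1
    return round(monthly_price * remaining_days / days_in_month, 2)
```

Under these assumptions, buying Reserved Concurrency Lines (10 credits / month) on 17 December leaves 15 of 31 days, so the first charge would be 4.84 credits, followed by the full 10 credits on 1 January.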

+

+

+

+

diff --git a/fern/static/images/changelog/custom-llm-credential.png b/fern/static/images/changelog/custom-llm-credential.png

new file mode 100644

index 00000000..48076e16

Binary files /dev/null and b/fern/static/images/changelog/custom-llm-credential.png differ

diff --git a/fern/static/images/changelog/webhook-credential.png b/fern/static/images/changelog/webhook-credential.png

new file mode 100644

index 00000000..47f2159b

Binary files /dev/null and b/fern/static/images/changelog/webhook-credential.png differ

diff --git a/fern/static/images/quickstart/billing/auto-reload.png b/fern/static/images/quickstart/billing/auto-reload.png

new file mode 100644

index 00000000..3b9b3d5d

Binary files /dev/null and b/fern/static/images/quickstart/billing/auto-reload.png differ

diff --git a/fern/static/images/quickstart/billing/download-invoice.png b/fern/static/images/quickstart/billing/download-invoice.png

new file mode 100644

index 00000000..78154ab5

Binary files /dev/null and b/fern/static/images/quickstart/billing/download-invoice.png differ

diff --git a/fern/static/images/quickstart/billing/invoice-detail-form.png b/fern/static/images/quickstart/billing/invoice-detail-form.png

new file mode 100644

index 00000000..6807e78e

Binary files /dev/null and b/fern/static/images/quickstart/billing/invoice-detail-form.png differ

diff --git a/fern/static/images/quickstart/billing/sample-invoice.png b/fern/static/images/quickstart/billing/sample-invoice.png

new file mode 100644

index 00000000..6dee1f88

Binary files /dev/null and b/fern/static/images/quickstart/billing/sample-invoice.png differ
