diff --git a/ydb/docs/en/core/concepts/_includes/datamodel/blockdevice.md b/ydb/docs/en/core/concepts/_includes/datamodel/blockdevice.md

deleted file mode 100644

index bb4dfd5c8887..000000000000

--- a/ydb/docs/en/core/concepts/_includes/datamodel/blockdevice.md

+++ /dev/null

@@ -1,4 +0,0 @@

-## Network block storage volume {#volume}

-

-{{ ydb-short-name }} can be used as a platform for creating a wide range of data storage and processing systems, for example, by implementing a [network block device](https://en.wikipedia.org/wiki/Network_block_device) on {{ ydb-short-name }}. Network block devices implement an interface for a local block device, as well as ensure fault-tolerance (through redundancy) and good scalability in terms of volume size and the number of input/output operations per unit of time. The downside of a network block device is that any input/output operation on such device requires network interaction, which might increase the latency of the network device compared to the local device. You can deploy a common file system on a network block device and/or run an application directly on the block device, such as a database management system.

-

diff --git a/ydb/docs/en/core/concepts/_includes/datamodel/dir.md b/ydb/docs/en/core/concepts/_includes/datamodel/dir.md

deleted file mode 100644

index 05138506ef34..000000000000

--- a/ydb/docs/en/core/concepts/_includes/datamodel/dir.md

+++ /dev/null

@@ -1,4 +0,0 @@

-## Directories {#dir}

-

-For convenience, the service supports creating directories like in a file system, meaning the entire database consists of a directory tree, while tables and other entities are in the leaves of this tree (similar to files in the file system). A directory can host multiple subdirectories and tables. The names of the entities they contain are unique.

-

diff --git a/ydb/docs/en/core/concepts/_includes/datamodel/intro.md b/ydb/docs/en/core/concepts/_includes/datamodel/intro.md

deleted file mode 100644

index 860fc10178c1..000000000000

--- a/ydb/docs/en/core/concepts/_includes/datamodel/intro.md

+++ /dev/null

@@ -1,4 +0,0 @@

-# Data model and schema

-

-This section describes the entities that {{ ydb-short-name }} uses within DBs. The {{ ydb-short-name }} core lets you flexibly implement various storage primitives, so new entities may appear in the future.

-

diff --git a/ydb/docs/en/core/concepts/_includes/datamodel/pq.md b/ydb/docs/en/core/concepts/_includes/datamodel/pq.md

deleted file mode 100644

index 1e469fbc9fcf..000000000000

--- a/ydb/docs/en/core/concepts/_includes/datamodel/pq.md

+++ /dev/null

@@ -1,4 +0,0 @@

-## Persistent queue {#persistent-queue}

-

-A persistent queue consists of one or more partitions, where each partition is a [FIFO](https://en.wikipedia.org/wiki/FIFO_(computing_and_electronics)) [message queue](https://en.wikipedia.org/wiki/Message_queue) ensuring reliable delivery between two or more components. Data messages have no type and are data blobs. Partitioning is a parallel processing tool that helps ensure high queue bandwidth. Mechanisms are provided to implement the "at least once" and the "exactly once" persistent queue delivery guarantees. A persistent queue in {{ ydb-short-name }} is similar to a topic in [Apache Kafka](https://en.wikipedia.org/wiki/Apache_Kafka).

-

diff --git a/ydb/docs/en/core/concepts/_includes/index/how_it_works.md b/ydb/docs/en/core/concepts/_includes/index/how_it_works.md

index 6e367f632015..fd8ec12a0f98 100644

--- a/ydb/docs/en/core/concepts/_includes/index/how_it_works.md

+++ b/ydb/docs/en/core/concepts/_includes/index/how_it_works.md

@@ -1,22 +1,22 @@

-## How it works?

+## How It Works?

-Fully explaining how YDB works in detail takes quite a while. Below you can review several key highlights and then continue exploring documentation to learn more.

+Fully explaining how YDB works in detail takes quite a while. Below you can review several key highlights and then continue exploring the documentation to learn more.

-### {{ ydb-short-name }} architecture {#ydb-architecture}

+### {{ ydb-short-name }} Architecture {#ydb-architecture}

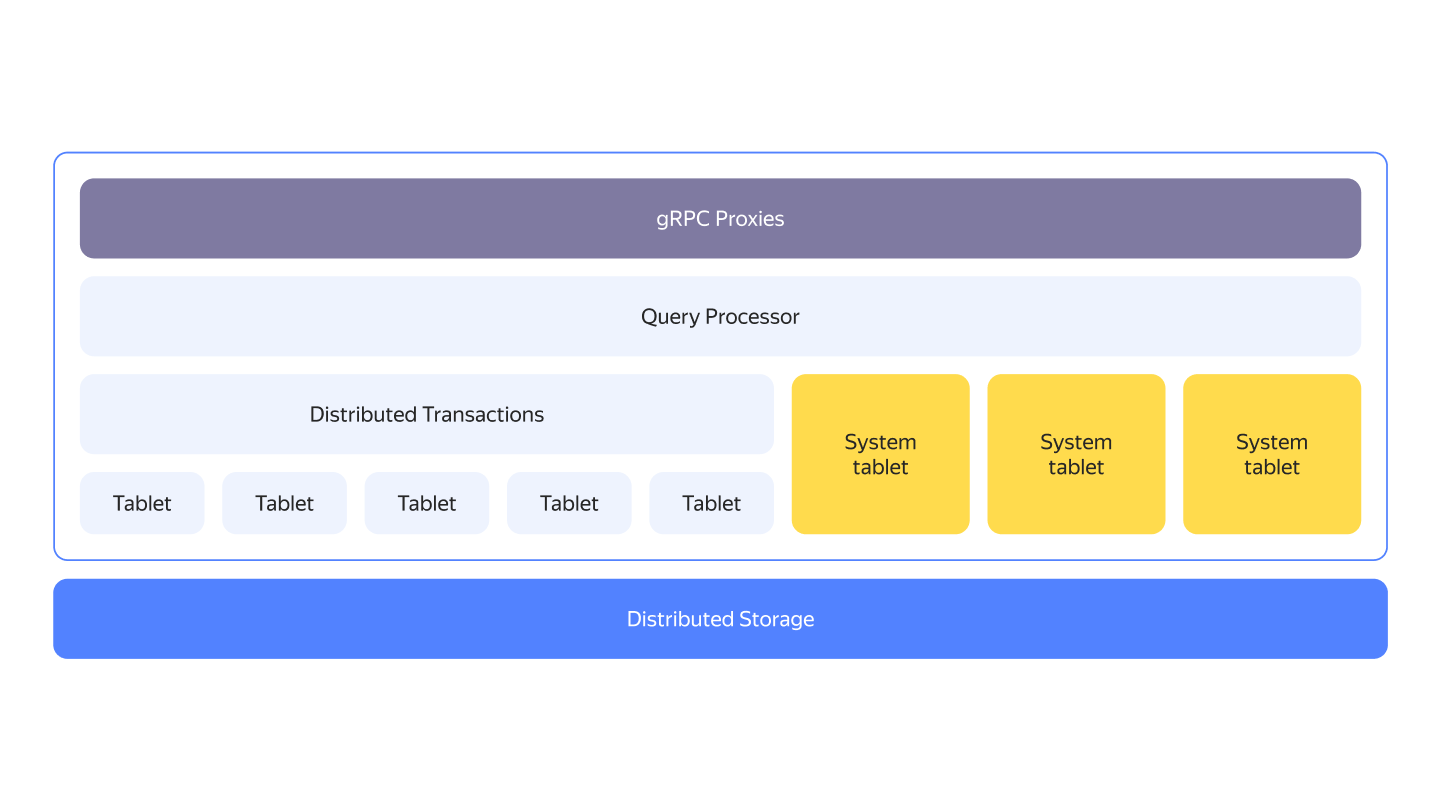

-{{ ydb-short-name }} clusters typically run on commodity hardware with shared-nothing architecture. If you look at {{ ydb-short-name }} from a bird's eye view, you'll see a layered architecture. The compute and storage layers are disaggregated, they can either run on separate sets of nodes or be co-located.

+{{ ydb-short-name }} clusters typically run on commodity hardware with a shared-nothing architecture. From a bird's eye view, {{ ydb-short-name }} exhibits a layered architecture. The compute and storage layers are disaggregated; they can either run on separate sets of nodes or be co-located.

-One of the key building blocks of {{ ydb-short-name }}'s compute layer is called a *tablet*. They are stateful logical components implementing various aspects of {{ ydb-short-name }}.

+One of the key building blocks of {{ ydb-short-name }}'s compute layer is called a *tablet*. Tablets are stateful logical components implementing various aspects of {{ ydb-short-name }}.

-The next level of detail of overall {{ ydb-short-name }} architecture is explained in the [{#T}](../../../contributor/general-schema.md) article.

+The next level of detail of the overall {{ ydb-short-name }} architecture is explained in the [{#T}](../../../contributor/general-schema.md) article.

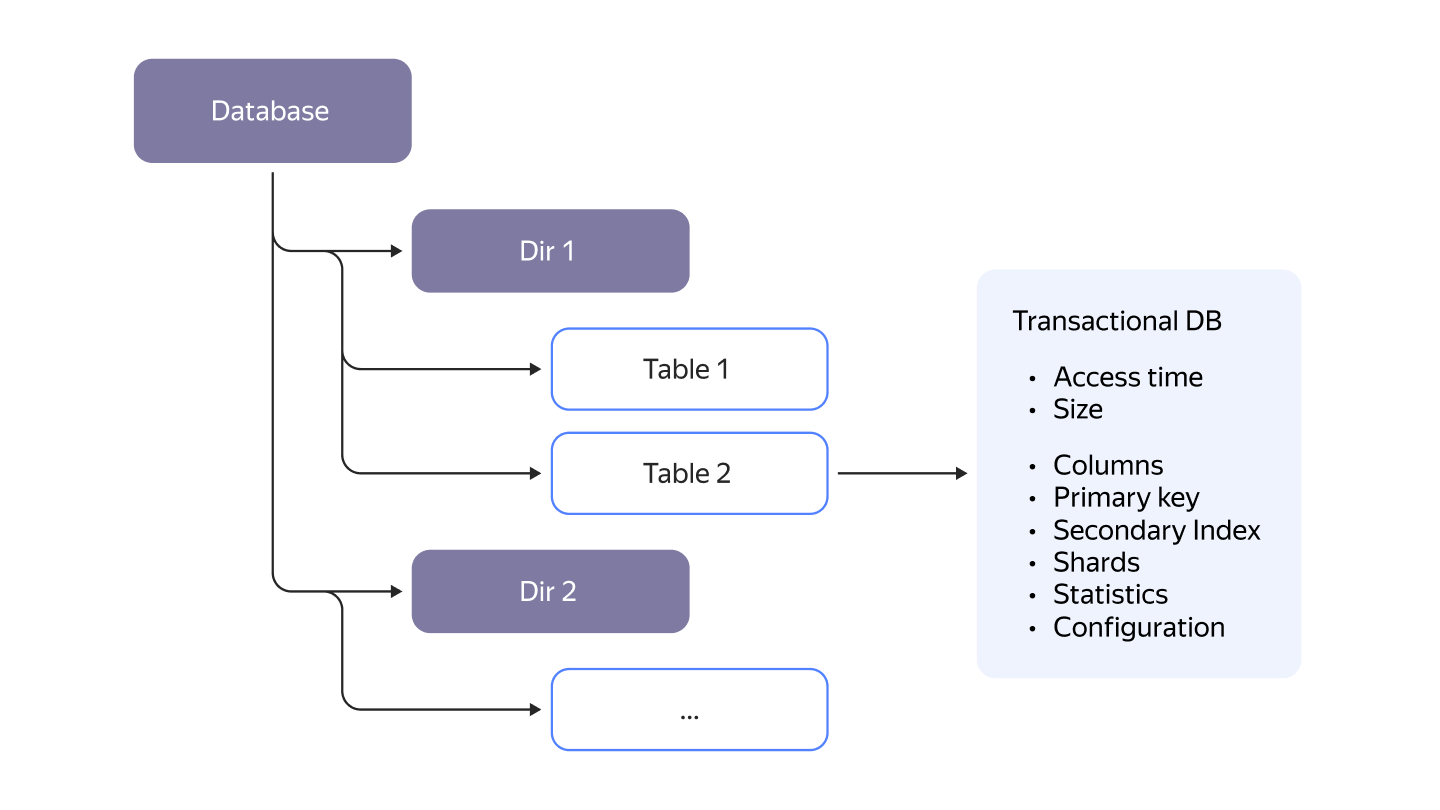

### Hierarchy {#ydb-hierarchy}

-From the user's perspective, everything inside {{ ydb-short-name }} is organized in a hierarchical structure using directories. It can have arbitrary depth depending on how you choose to organize your data and projects. Even though {{ ydb-short-name }} does not have a fixed hierarchy depth like in other SQL implementations, it will still feel familiar as this is exactly how any virtual filesystem looks like.

+From the user's perspective, everything inside {{ ydb-short-name }} is organized in a hierarchical structure using directories. It can have arbitrary depth depending on how you choose to organize your data and projects. Even though {{ ydb-short-name }} does not have a fixed hierarchy depth like in other SQL implementations, it will still feel familiar as this is exactly how any virtual filesystem looks.

### Table {#table}

@@ -27,7 +27,7 @@ From the user's perspective, everything inside {{ ydb-short-name }} is organized

* [Row-oriented tables](../../datamodel/table.md#row-tables) are designed for OLTP workloads.

* [Column-oriented tables](../../datamodel/table.md#column-tables) are designed for OLAP workloads.

-Logically, from the user’s perspective, both types of tables look the same. The main difference between row-oriented and column-oriented tables lies in how the data is physically stored. In row-oriented tables, the values of all columns in each row are stored together. In contrast, in column-oriented tables, each column is stored separately, meaning that cells from different rows are stored next to each other within the same column.

+Logically, from the user's perspective, both types of tables look the same. The main difference between row-oriented and column-oriented tables lies in how the data is physically stored. In row-oriented tables, the values of all columns in each row are stored together. In contrast, in column-oriented tables, each column is stored separately, meaning that cells from different rows are stored next to each other within the same column.

Regardless of the type, each table must have a primary key. Column-oriented tables can only have `NOT NULL` columns in primary keys. Table data is physically sorted by the primary key.

@@ -38,32 +38,32 @@ Partitioning works differently in row-oriented and column-oriented tables:

Each partition of a table is processed by a specific [tablet](../../glossary.md#tablets), called a [data shard](../../glossary.md#datashard) for row-oriented tables and a [column shard](../../glossary.md#columnshard) for column-oriented tables.

-#### Split by load {#split-by-load}

+#### Split by Load {#split-by-load}

-Data shards will automatically split into more ones as the load increases. They automatically merge back to the appropriate number when the peak load goes away.

+Data shards will automatically split into more as the load increases. They automatically merge back to the appropriate number when the peak load subsides.

-#### Split by size {#split-by-size}

+#### Split by Size {#split-by-size}

.png)

-Data shards also will automatically split when the data size increases. They automatically merge back if enough data will be deleted.

+Data shards will also automatically split when the data size increases. They automatically merge back if enough data is deleted.

-### Automatic balancing {#automatic-balancing}

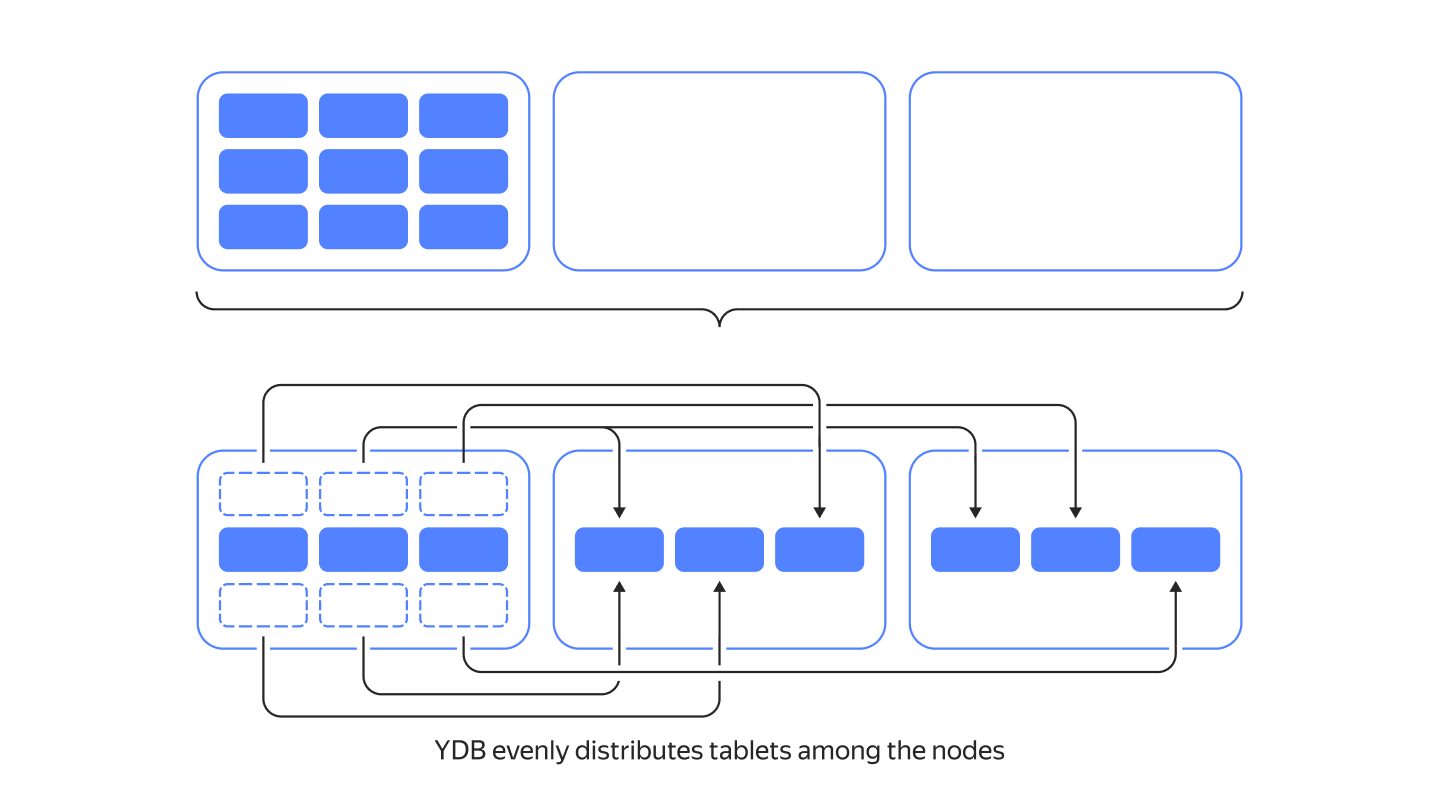

+### Automatic Balancing {#automatic-balancing}

-{{ ydb-short-name }} evenly distributes tablets among available nodes. It moves heavily loaded tablets from overloaded nodes. CPU, Memory, and Network metrics are tracked to facilitate this.

+{{ ydb-short-name }} evenly distributes tablets among available nodes. It moves heavily loaded tablets from overloaded nodes. CPU, memory, and network metrics are tracked to facilitate this.

-### Distributed Storage internals {#ds-internals}

+### Distributed Storage Internals {#ds-internals}

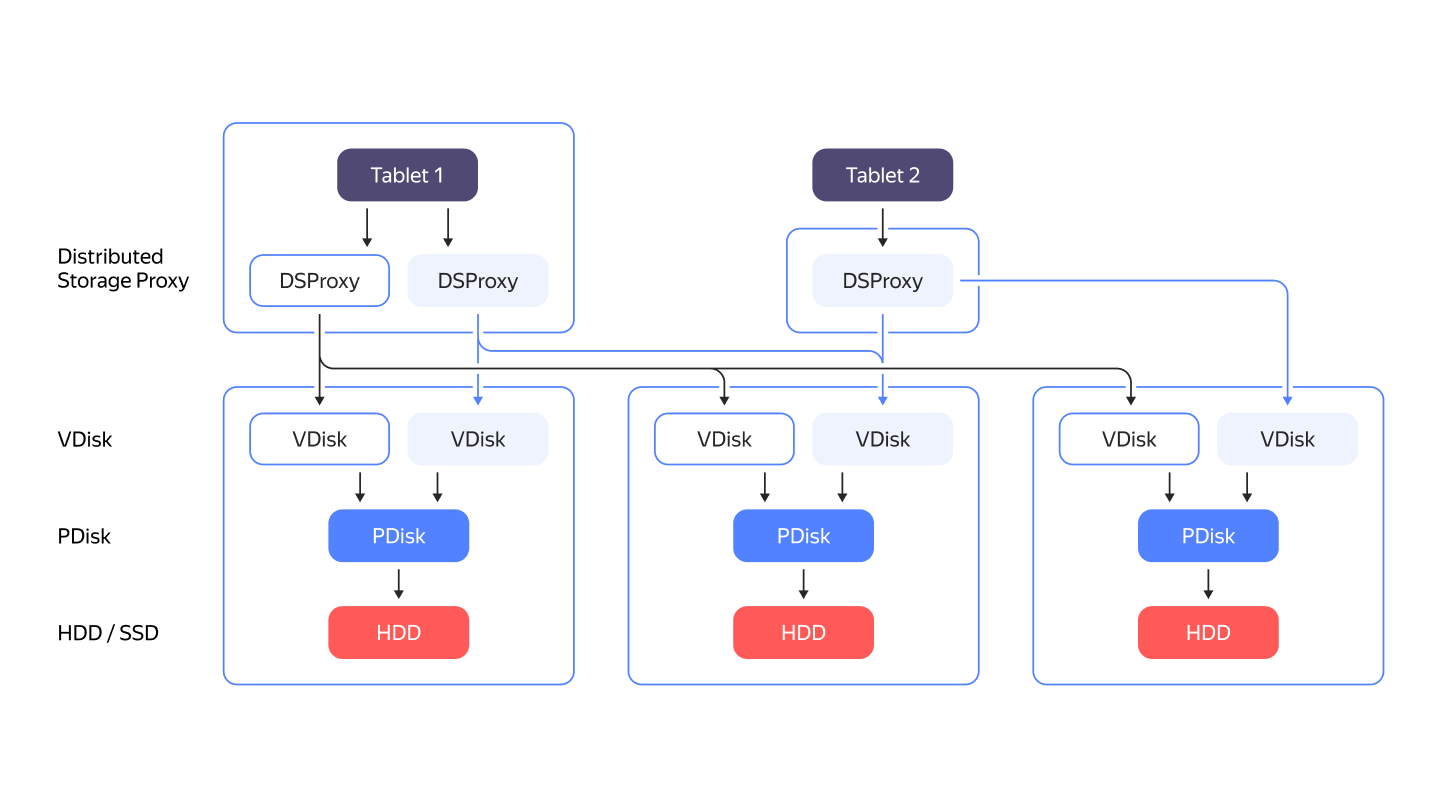

{{ ydb-short-name }} doesn't rely on any third-party filesystem. It stores data by directly working with disk drives as block devices. All major disk kinds are supported: NVMe, SSD, or HDD. The PDisk component is responsible for working with a specific block device. The abstraction layer above PDisk is called VDisk. There is a special component called DSProxy between a tablet and VDisk. DSProxy analyzes disk availability and characteristics and chooses which disks will handle a request and which won't.

-### Distributed Storage proxy (DSProxy) {#ds-proxy}

+### Distributed Storage Proxy (DSProxy) {#ds-proxy}

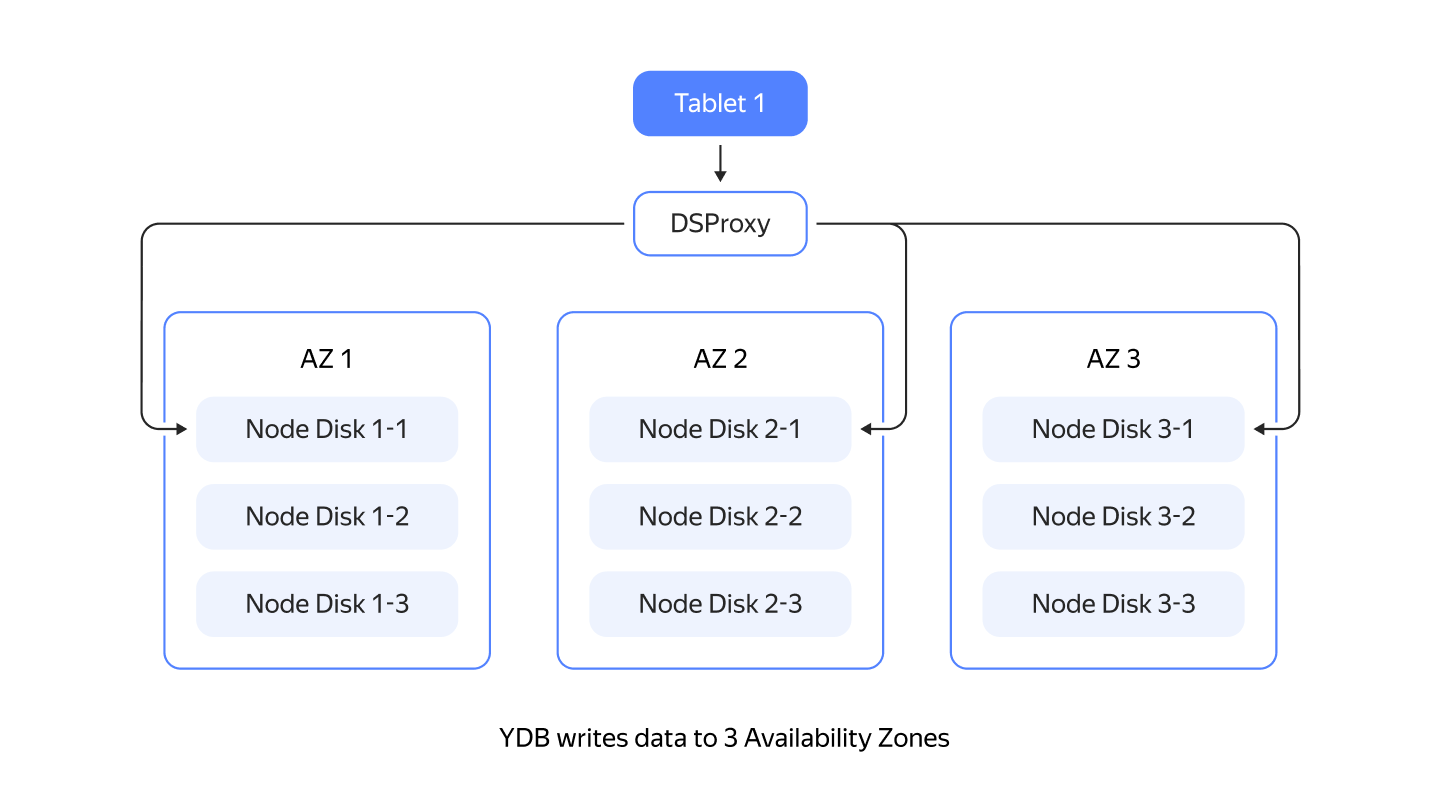

-A common fault-tolerant setup of {{ ydb-short-name }} spans 3 datacenters or availability zones (AZ). When {{ ydb-short-name }} writes data to 3 AZ, it doesn’t send requests to obviously bad disks and continues to operate without interruption even if one AZ and a disk in another AZ are lost.

\ No newline at end of file

+A common fault-tolerant setup of {{ ydb-short-name }} spans three datacenters or availability zones (AZ). When {{ ydb-short-name }} writes data to three AZs, it doesn't send requests to obviously bad disks and continues to operate without interruption even if one AZ and a disk in another AZ are lost.

\ No newline at end of file

diff --git a/ydb/docs/en/core/concepts/_includes/index/intro.md b/ydb/docs/en/core/concepts/_includes/index/intro.md

index ee7db6e9a005..cfd6d12f28fd 100644

--- a/ydb/docs/en/core/concepts/_includes/index/intro.md

+++ b/ydb/docs/en/core/concepts/_includes/index/intro.md

@@ -1,23 +1,23 @@

-# {{ ydb-short-name }} overview

+# {{ ydb-short-name }} Overview

-*{{ ydb-short-name }}* is a horizontally scalable distributed fault-tolerant DBMS. {{ ydb-short-name }} is designed for high performance with a typical server being capable of handling tens of thousands of queries per second. The system is designed to handle hundreds of petabytes of data. {{ ydb-short-name }} can operate in single data center and geo-distributed (cross data center) modes on a cluster of thousands of servers.

+*{{ ydb-short-name }}* is a horizontally scalable, distributed, fault-tolerant DBMS. It is designed for high performance, with a typical server capable of handling tens of thousands of queries per second. The system is designed to handle hundreds of petabytes of data. {{ ydb-short-name }} can operate in both single data center and geo-distributed (cross data center) modes on a cluster of thousands of servers.

{{ ydb-short-name }} provides:

-* [Strict consistency](https://en.wikipedia.org/wiki/Consistency_model#Strict_Consistency) which can be relaxed to increase performance.

-* Support for queries written in [YQL](../../../yql/reference/index.md) (an SQL dialect for working with big data).

+* [Strict consistency](https://en.wikipedia.org/wiki/Consistency_model#Strict_Consistency), which can be relaxed to increase performance.

+* Support for queries written in [YQL](../../../yql/reference/index.md), an SQL dialect for working with big data.

* Automatic data replication.

-* High availability with automatic failover in case a server, rack, or availability zone goes offline.

+* High availability with automatic failover if a server, rack, or availability zone goes offline.

* Automatic data partitioning as data or load grows.

-To interact with {{ ydb-short-name }}, you can use the [{{ ydb-short-name }} CLI](../../../reference/ydb-cli/index.md) and [SDK](../../../reference/ydb-sdk/index.md) fo C++, C#, Go, Java, Node.js, PHP, Python, and Rust.

+To interact with {{ ydb-short-name }}, you can use the [{{ ydb-short-name }} CLI](../../../reference/ydb-cli/index.md) and [SDK](../../../reference/ydb-sdk/index.md) for C++, C#, Go, Java, Node.js, PHP, Python, and Rust.

-{{ ydb-short-name }} supports a relational [data model](../../../concepts/datamodel/table.md) and manages [row-oriented](../../datamodel/table.md#row-oriented-tables) and [column-oriented](../../datamodel/table.md#column-oriented-tables) tables with a predefined schema. To make it easier to organize tables, directories can be created like in the file system. In addition to tables, {{ ydb-short-name }} supports [topics](../../topic.md) as an entity for storing unstructured messages and delivering them to multiple subscribers.

+{{ ydb-short-name }} supports a relational [data model](../../../concepts/datamodel/table.md) and manages [row-oriented](../../datamodel/table.md#row-oriented-tables) and [column-oriented](../../datamodel/table.md#column-oriented-tables) tables with a predefined schema. Directories can be created like in a file system to organize tables. In addition to tables, {{ ydb-short-name }} supports [topics](../../topic.md) for storing unstructured messages and delivering them to multiple subscribers.

-Database commands are mainly written in YQL, an SQL dialect. This gives the user a powerful and already familiar way to interact with the database.

+Database commands are mainly written in YQL, an SQL dialect, providing a powerful and familiar way to interact with the database.

-{{ ydb-short-name }} supports high-performance distributed [ACID](https://en.wikipedia.org/wiki/ACID_(computer_science)) transactions that may affect multiple records in different tables. It provides the serializable isolation level, which is the strictest transaction isolation. You can also reduce the level of isolation to raise performance.

+{{ ydb-short-name }} supports high-performance distributed [ACID](https://en.wikipedia.org/wiki/ACID_(computer_science)) transactions that may affect multiple records in different tables. It provides the serializable isolation level, the strictest transaction isolation, with the option to reduce the isolation level to enhance performance.

-{{ ydb-short-name }} natively supports different processing options, such as [OLTP](https://en.wikipedia.org/wiki/Online_transaction_processing) and [OLAP](https://en.wikipedia.org/wiki/Online_analytical_processing). The current version offers limited analytical query support. This is why we can say that {{ ydb-short-name }} is currently an OLTP database.

+{{ ydb-short-name }} natively supports different processing options, such as [OLTP](https://en.wikipedia.org/wiki/Online_transaction_processing) and [OLAP](https://en.wikipedia.org/wiki/Online_analytical_processing). The current version offers limited analytical query support, which is why {{ ydb-short-name }} is currently considered an OLTP database.

-{{ ydb-short-name }} is an open-source system. The {{ ydb-short-name }} source code is available under [Apache License 2.0](https://www.apache.org/licenses/LICENSE-2.0). Client applications interact with {{ ydb-short-name }} based on [gRPC](https://grpc.io/) that has an open specification. It allows implementing an SDK for any programming language.

+{{ ydb-short-name }} is an open-source system. The source code is available under the [Apache License 2.0](https://www.apache.org/licenses/LICENSE-2.0). Client applications interact with {{ ydb-short-name }} based on [gRPC](https://grpc.io/), which has an open specification, allowing for SDK implementation in any programming language.

diff --git a/ydb/docs/en/core/concepts/_includes/index/when_use.md b/ydb/docs/en/core/concepts/_includes/index/when_use.md

index 2c1402666f08..06ff1f3a683f 100644

--- a/ydb/docs/en/core/concepts/_includes/index/when_use.md

+++ b/ydb/docs/en/core/concepts/_includes/index/when_use.md

@@ -1,4 +1,4 @@

-## Use cases {#use-cases}

+## Use Cases {#use-cases}

{{ ydb-short-name }} can be used as an alternative solution in the following cases:

diff --git a/ydb/docs/en/core/concepts/_includes/limits-ydb.md b/ydb/docs/en/core/concepts/_includes/limits-ydb.md

index 287f32aa8017..e6ab3959b4a5 100644

--- a/ydb/docs/en/core/concepts/_includes/limits-ydb.md

+++ b/ydb/docs/en/core/concepts/_includes/limits-ydb.md

@@ -1,8 +1,8 @@

-# Database limits

+# Database Limits

This section describes the parameters of limits set in {{ ydb-short-name }}.

-## Schema object limits {#schema-object}

+## Schema Object Limits {#schema-object}

The table below shows the limits that apply to schema objects: tables, databases, and columns. The "Object" column specifies the type of schema object that the limit applies to.

The "Error type" column shows the status that the query ends with if an error occurs. For more information about statuses, see [Error handling in the API](../../reference/ydb-sdk/error_handling.md).

@@ -12,34 +12,34 @@ The "Error type" column shows the status that the query ends with if an error oc

| Database | Maximum path depth | 32 | Maximum number of nested path elements (directories, tables). | MaxDepth | SCHEME_ERROR |

| Database | Maximum number of paths (schema objects) | 10,000 | Maximum number of path elements (directories, tables) in a database. | MaxPaths | GENERIC_ERROR |

| Database | Maximum number of tablets | 200,000 | Maximum number of tablets (table shards and system tablets) that can run in the database. An error is returned if a query to create, copy, or update a table exceeds this limit. When a database reaches the maximum number of tablets, no automatic table sharding takes place. | MaxShards | GENERIC_ERROR |

-| Database | Maximum object name length | 255 | Limits the number of characters in the name of a schema object, such as a directory or a table | MaxPathElementLength | SCHEME_ERROR |

+| Database | Maximum object name length | 255 | Limits the number of characters in the name of a schema object, such as a directory or a table. | MaxPathElementLength | SCHEME_ERROR |

| Database | Maximum ACL size | 10 KB | Maximum total size of all access control rules that can be saved for the schema object in question. | MaxAclBytesSize | GENERIC_ERROR |

| Directory | Maximum number of objects | 100,000 | Maximum number of tables and child directories created in a directory. | MaxChildrenInDir | SCHEME_ERROR |

| Table | Maximum number of table shards | 35,000 | Maximum number of table shards. | MaxShardsInPath | GENERIC_ERROR |

| Table | Maximum number of columns | 200 | Limits the total number of columns in a table. | MaxTableColumns | GENERIC_ERROR |

-| Table | Maximum column name length | 255 | Limits the number of characters in a column name | MaxTableColumnNameLength | GENERIC_ERROR |

+| Table | Maximum column name length | 255 | Limits the number of characters in a column name. | MaxTableColumnNameLength | GENERIC_ERROR |

| Table | Maximum number of columns in a primary key | 20 | Each table must have a primary key. The number of columns in the primary key may not exceed this limit. | MaxTableKeyColumns | GENERIC_ERROR |

| Table | Maximum number of indexes | 20 | Maximum number of indexes other than the primary key index that can be created in a table. | MaxTableIndices | GENERIC_ERROR |

| Table | Maximum number of followers | 3 | Maximum number of read-only replicas that can be specified when creating a table with followers. | MaxFollowersCount | GENERIC_ERROR |

-| Table | Maximum number of tables to copy | 10,000 | Limit on the size of the table list for persistent table copy operations | MaxConsistentCopyTargets | GENERIC_ERROR |

+| Table | Maximum number of tables to copy | 10,000 | Limit on the size of the table list for persistent table copy operations. | MaxConsistentCopyTargets | GENERIC_ERROR |

{wide-content}

-## Size limits for stored data {#data-size}

+## Size Limits for Stored Data {#data-size}

| Parameter | Value | Error type |

| :--- | :--- | :---: |

| Maximum total size of all columns in a primary key | 1 MB | GENERIC_ERROR |

| Maximum size of a string column value | 16 MB | GENERIC_ERROR |

-## Analytical table limits

+## Analytical Table Limits

| Parameter | Value |

:--- | :---

| Maximum row size | 8 MB |

| Maximum size of an inserted data block | 8 MB |

-## Limits on query execution {#query}

+## Limits on Query Execution {#query}

The table below lists the limits that apply to query execution.

@@ -50,7 +50,7 @@ The table below lists the limits that apply to query execution.

| Maximum query text length | 10 KB | The maximum allowable length of YQL query text. | BAD_REQUEST |

| Maximum size of parameter values | 50 MB | The maximum total size of parameters passed when executing a previously prepared query. | BAD_REQUEST |

-{% cut "Legacy limits" %}

+{% cut "Legacy Limits" %}

In previous versions of {{ ydb-short-name }}, queries were typically executed using an API called "Table Service". This API had the following limitations, which have been addressed by replacing it with a new API called "Query Service".

@@ -61,7 +61,7 @@ In previous versions of {{ ydb-short-name }}, queries were typically executed us

{% endcut %}

-## Topic limits {#topic}

+## Topic Limits {#topic}

| Parameter | Value |

| :--- | :--- |

diff --git a/ydb/docs/en/core/concepts/_includes/scan_query.md b/ydb/docs/en/core/concepts/_includes/scan_query.md

index 018e5b527b49..3dfc775fad5c 100644

--- a/ydb/docs/en/core/concepts/_includes/scan_query.md

+++ b/ydb/docs/en/core/concepts/_includes/scan_query.md

@@ -1,4 +1,4 @@

-# Scan queries in {{ ydb-short-name }}

+# Scan Queries in {{ ydb-short-name }}

Scan Queries is a separate data access interface designed primarily for running analytical ad hoc queries against a DB.

@@ -6,7 +6,7 @@ This method of executing queries has the following unique features:

* Only *Read-Only* queries.

* In `SERIALIZABLE_RW` mode, a data snapshot is taken and then used for all subsequent operations. As a result, the impact on OLTP transactions is minimal (only taking a snapshot).

-* The output of a query is a data stream ([grpc stream](https://grpc.io/docs/what-is-grpc/core-concepts/)). This means scan queries have no limit on the number of rows in the result.

+* The output of a query is a data stream ([gRPC stream](https://grpc.io/docs/what-is-grpc/core-concepts/)). This means scan queries have no limit on the number of rows in the result.

* Due to the high overhead, it is only suitable for ad hoc queries.

{% note info %}

@@ -19,7 +19,7 @@ Scan queries cannot currently be considered an effective solution for running OL

* The query duration is limited to 5 minutes.

* Many operations (including sorting) are performed entirely in memory, which may lead to resource shortage errors when running complex queries.

-* A single strategy is currently in use for joins: *MapJoin* (a.k.a. *Broadcast Join*) where the "right" table is converted to a map; and therefore, must be no more than single gigabytes in size.

+* A single strategy is currently in use for joins: *MapJoin* (a.k.a. *Broadcast Join*) where the "right" table is converted to a map; and therefore, must be no more than a few gigabytes in size.

* Prepared form isn't supported, so for each call, a query is compiled.

* There is no optimization for point reads or reading small ranges of data.

* The SDK doesn't support automatic retry.

@@ -31,7 +31,7 @@ Despite the fact that *Scan Queries* obviously don't interfere with the executio

{% endnote %}

-## How do I use it? {#how-use}

+## How Do I Use It? {#how-use}

Like other types of queries, *Scan Queries* are available via the {% if link-console-main %}[management console]({{ link-console-main }}) (the query must specify `PRAGMA Kikimr.ScanQuery = "true";`),{% endif %} [CLI](../../reference/ydb-cli/commands/scan-query.md), and [SDK](../../reference/ydb-sdk/index.md).

@@ -39,7 +39,7 @@ Like other types of queries, *Scan Queries* are available via the {% if link-con

### C++ SDK {#cpp}

-To run a query using *Scan Queries*, use 2 methods from the `Ydb::TTableClient` class:

+To run a query using *Scan Queries*, use two methods from the `Ydb::TTableClient` class:

```cpp

class TTableClient {

diff --git a/ydb/docs/en/core/concepts/_includes/secondary_indexes.md b/ydb/docs/en/core/concepts/_includes/secondary_indexes.md

index 4419e61f1ea7..33df27804294 100644

--- a/ydb/docs/en/core/concepts/_includes/secondary_indexes.md

+++ b/ydb/docs/en/core/concepts/_includes/secondary_indexes.md

@@ -1,4 +1,4 @@

-# Secondary indexes

+# Secondary Indexes

{{ ydb-short-name }} automatically creates a primary key index, which is why selection by primary key is always efficient, affecting only the rows needed. Selections by criteria applied to one or more non-key columns typically result in a full table scan. To make these selections efficient, use _secondary indexes_.

@@ -9,35 +9,35 @@ The current version of {{ ydb-short-name }} implements _synchronous_ and _asynch

When a user sends an SQL query to insert, modify, or delete data, the database transparently generates commands to modify the index table. A table may have multiple secondary indexes. An index may include multiple columns, and the sequence of columns in an index matters. A single column may be included in multiple indexes. In addition to the specified columns, every index implicitly stores the table primary key columns to enable navigation from an index record to the table row.

-## Synchronous secondary index {#sync}

+## Synchronous Secondary Index {#sync}

A synchronous index is updated simultaneously with the table that it indexes. This index ensures [strict consistency](https://en.wikipedia.org/wiki/Consistency_model) through [distributed transactions](../transactions.md#distributed-tx). While reads and blind writes to a table with no index can be performed without a planning stage, significantly reducing delays, such optimization is impossible when writing data to a table with a synchronous index.

-## Asynchronous secondary index {#async}

+## Asynchronous Secondary Index {#async}

Unlike a synchronous index, an asynchronous index doesn't use distributed transactions. Instead, it receives changes from an indexed table in the background. Write transactions to a table using this index are performed with no planning overheads due to reduced guarantees: an asynchronous index provides [eventual consistency](https://en.wikipedia.org/wiki/Eventual_consistency), but no strict consistency. You can only use asynchronous indexes in read transactions in [Stale Read Only](transactions.md#modes) mode.

-## Covering secondary index {#covering}

+## Covering Secondary Index {#covering}

You can copy the contents of columns into a covering index. This eliminates the need to read data from the main table when performing reads by index and significantly reduces delays. At the same time, such denormalization leads to increased usage of disk space and may slow down inserts and updates due to the need for additional data copying.

-## Unique secondary index {#unique}

+## Unique Secondary Index {#unique}

This type of index enforces unique constraint behavior and, like other indexes, allows efficient point lookup queries. {{ ydb-short-name }} uses it to perform additional checks, ensuring that each distinct value in the indexed column set appears in the table no more than once. If a modifying query violates the constraint, it will be aborted with a `PRECONDITION_FAILED` status. Therefore, client code must be prepared to handle this status.

-A unique secondary index is a synchronous index, so the update process is the same as in the [Synchronous secondary index](#sync) section described above from a transaction perspective.

+A unique secondary index is a synchronous index, so the update process is the same as in the [Synchronous Secondary Index](#sync) section described above from a transaction perspective.

### Limitations

Currently, a unique index cannot be added to an existing table.

-## Vector index

+## Vector Index

[Vector Index](vector_indexes.md) is a special type of secondary index.

Unlike secondary indexes, which optimize equality or range searches, vector indexes allow similarity searches based on distance or similarity functions.

-## Creating a secondary index online {#index-add}

+### Creating a Secondary Index Online {#index-add}

{{ ydb-short-name }} lets you create new and delete existing secondary indexes without stopping the service. For a single table, you can only create one index at a time.

@@ -45,15 +45,15 @@ Online index creation consists of the following steps:

1. Taking a snapshot of a data table and creating an index table marked that writes are available.

- After this step, write transactions are distributed, writing to the main table and the index, respectively. The index is not yet available to the user.

+ After this step, write transactions are distributed, writing to the main table and the index, respectively. The index is not yet available to the user.

1. Reading the snapshot of the main table and writing data to the index.

- "Writes to the past" are implemented: situations where data updates in step 1 change the data written in step 2 are resolved.

+ "Writes to the past" are implemented: situations where data updates in step 1 change the data written in step 2 are resolved.

1. Publishing the results and deleting the snapshot.

- The index is ready to use.

+ The index is ready to use.

Possible impact on user transactions:

@@ -64,7 +64,7 @@ The rate of data writes is selected to minimize their impact on user transaction

Creating an index is an asynchronous operation. If the client-server connection is interrupted after the operation has started, index building continues. You can manage asynchronous operations using the {{ ydb-short-name }} CLI.

-## Creating and deleting secondary indexes {#ddl}

+## Creating and Deleting Secondary Indexes {#ddl}

A secondary index can be:

@@ -73,6 +73,6 @@ A secondary index can be:

- Deleted from an existing table with the YQL [`ALTER TABLE`](../../yql/reference/syntax/alter_table/index.md) statement or the YDB CLI [`table index drop`](../../reference/ydb-cli/commands/secondary_index.md#drop) command.

- Deleted together with the table using the YQL [`DROP TABLE`](../../yql/reference/syntax/drop_table.md) statement or the YDB CLI `table drop` command.

-## Using secondary indexes {#use}

+## Using Secondary Indexes {#use}

For detailed information on using secondary indexes in applications, refer to the [relevant article](../../dev/secondary-indexes.md) in the documentation section for developers.

diff --git a/ydb/docs/en/core/concepts/_includes/transactions.md b/ydb/docs/en/core/concepts/_includes/transactions.md

index 1e1d08830b3b..084cc3d919ae 100644

--- a/ydb/docs/en/core/concepts/_includes/transactions.md

+++ b/ydb/docs/en/core/concepts/_includes/transactions.md

@@ -1,12 +1,12 @@

-# {{ ydb-short-name }} transactions and queries

+# {{ ydb-short-name }} Transactions and Queries

This section describes the specifics of YQL implementation for {{ ydb-short-name }} transactions.

-## Query language {#query-language}

+## Query Language {#query-language}

The main tool for creating, modifying, and managing data in {{ ydb-short-name }} is a declarative query language called YQL. YQL is an SQL dialect that can be considered a database interaction standard. {{ ydb-short-name }} also supports a set of special RPCs useful in managing a tree schema or a cluster, for instance.

-## Transaction modes {#modes}

+## Transaction Modes {#modes}

By default, {{ ydb-short-name }} transactions are executed in *Serializable* mode. It provides the strictest [isolation level](https://en.wikipedia.org/wiki/Isolation_(database_systems)#Serializable) for custom transactions. This mode guarantees that the result of successful parallel transactions is equivalent to their serial execution, and there are no [read anomalies](https://en.wikipedia.org/wiki/Isolation_(database_systems)#Read_phenomena) for successful transactions.

@@ -22,7 +22,7 @@ If consistency or freshness requirement for data read by a transaction can be re

The transaction execution mode is specified in its settings when creating the transaction. See the examples for the {{ ydb-short-name }} SDK in the [{#T}](../../recipes/ydb-sdk/tx-control.md).

-## YQL language {#language-yql}

+## YQL Language {#language-yql}

Statements implemented in YQL can be divided into two classes: [Data Definition Language (DDL)](https://en.wikipedia.org/wiki/Data_definition_language) and [Data Manipulation Language (DML)](https://en.wikipedia.org/wiki/Data_manipulation_language).

@@ -31,7 +31,7 @@ For more information about supported YQL constructs, see the [YQL documentation]

Listed below are the features and limitations of YQL support in {{ ydb-short-name }}, which might not be obvious at first glance and are worth noting:

* Multi-statement transactions (transactions made up of a sequence of YQL statements) are supported. Transactions may interact with client software, or in other words, client interactions with the database might look as follows: `BEGIN; make a SELECT; analyze the SELECT results on the client side; ...; make an UPDATE; COMMIT`. We should note that if the transaction body is fully formed before accessing the database, it will be processed more efficiently.

-* {{ ydb-short-name }} does not support transactions that combine DDL and DML queries. The conventional [ACID]{% if lang == "en" %}(https://en.wikipedia.org/wiki/ACID){% endif %}{% if lang == "ru" %}(https://ru.wikipedia.org/wiki/ACID){% endif %} notion of a transactions is applicable specifically to DML queries, that is, queries that change data. DDL queries must be idempotent, meaning repeatable if an error occurs. If you need to manipulate a schema, each manipulation is transactional, while a set of manipulations is not.

+* {{ ydb-short-name }} does not support transactions that combine DDL and DML queries. The conventional [ACID](https://en.wikipedia.org/wiki/ACID) notion of a transaction is applicable specifically to DML queries, that is, queries that change data. DDL queries must be idempotent, meaning repeatable if an error occurs. If you need to manipulate a schema, each manipulation is transactional, while a set of manipulations is not.

* YQL implementation used in {{ ydb-short-name }} employs the [Optimistic Concurrency Control](https://en.wikipedia.org/wiki/Optimistic_concurrency_control) mechanism. If an entity is affected during a transaction, optimistic blocking is applied. When the transaction is complete, the mechanism verifies that the locks have not been invalidated. For the user, locking optimism means that when transactions are competing with one another, the one that finishes first wins. Competing transactions fail with the `Transaction locks invalidated` error.

* All changes made during the transaction accumulate in the database server memory and are applied when the transaction completes. If the locks are not invalidated, all the changes accumulated are committed atomically; if at least one lock is invalidated, none of the changes are committed. The above model involves certain restrictions: changes made by a single transaction must fit inside the available memory.

@@ -47,7 +47,7 @@ For efficient execution, a transaction should be formed so that the first part o

For more information about YQL support in {{ ydb-short-name }}, see the [YQL documentation](../../yql/reference/index.md).

-## Distributed transactions {#distributed-tx}

+## Distributed Transactions {#distributed-tx}

A database [table](../datamodel/table.md) in {{ ydb-short-name }} can be sharded by the range of the primary key values. Different table shards can be served by different distributed database servers (including ones in different locations). They can also move independently between servers to enable rebalancing or ensure shard operability if servers or network equipment goes offline.

@@ -55,7 +55,7 @@ A [topic](../topic.md) in {{ ydb-short-name }} can be sharded into several parti

{{ ydb-short-name }} supports distributed transactions. Distributed transactions are transactions that affect more than one shard of one or more tables and topics. They require more resources and take more time. While point reads and writes may take up to 10 ms in the 99th percentile, distributed transactions typically take from 20 to 500 ms.

-## Transactions with topics and tables {#topic-table-transactions}

+## Transactions with Topics and Tables {#topic-table-transactions}

{{ ydb-short-name }} supports transactions involving [row-oriented tables](../glossary.md#row-oriented-table) and/or [topics](../glossary.md#topic). This makes it possible to transactionally transfer data from tables to topics and vice versa, as well as between topics. This ensures that data is neither lost nor duplicated in case of a network outage or other issues. This enables the implementation of the transactional outbox pattern within {{ ydb-short-name }}.

diff --git a/ydb/docs/en/core/concepts/_includes/ttl.md b/ydb/docs/en/core/concepts/_includes/ttl.md

index 63cc21908fd8..bed7ae216836 100644

--- a/ydb/docs/en/core/concepts/_includes/ttl.md

+++ b/ydb/docs/en/core/concepts/_includes/ttl.md

@@ -1,8 +1,8 @@

-# Time to Live (TTL) and eviction to external storage

+# Time to Live (TTL) and Eviction to External Storage

This section describes how the TTL mechanism works and what its limits are.

-## How it works {#how-it-works}

+## How It Works {#how-it-works}

The table's TTL is a sequence of storage tiers. Each tier contains an expression (TTL expression) and an action. When the expression is triggered, that tier is assigned to the row. When a tier is assigned to a row, the specified action is automatically performed: moving the row to external storage or deleting it. External storage is represented by the [external data source](../datamodel/external_data_source.md) object.

@@ -46,7 +46,7 @@ The *BRO* has the following properties:

* `Uint64`.

* `DyNumber`.

-* The value in the TTL column with a numeric type (`Uint32`, `Uint64`, or `DyNumber`) is interpreted as a [Unix time]{% if lang == "en" %}(https://en.wikipedia.org/wiki/Unix_time){% endif %}{% if lang == "ru" %}(https://ru.wikipedia.org/wiki/Unix-время){% endif %} value. The following units are supported (set in the TTL settings):

+* The value in the TTL column with a numeric type (`Uint32`, `Uint64`, or `DyNumber`) is interpreted as a [Unix time](https://en.wikipedia.org/wiki/Unix_time) value. The following units are supported (set in the TTL settings):

* Seconds.

* Milliseconds.

diff --git a/ydb/docs/en/core/concepts/async-replication.md b/ydb/docs/en/core/concepts/async-replication.md

index 512ef03b7434..7149783bdbf2 100644

--- a/ydb/docs/en/core/concepts/async-replication.md

+++ b/ydb/docs/en/core/concepts/async-replication.md

@@ -1,4 +1,4 @@

-# Asynchronous replication

+# Asynchronous Replication

Asynchronous replication allows for synchronizing data between {{ ydb-short-name }} [databases](glossary.md#database) in near real time. It can also be used for data migration between databases with minimal downtime for applications interacting with these databases. Such databases can be located in the same {{ ydb-short-name }} [cluster](glossary.md#cluster) as well as in different clusters.

@@ -66,13 +66,13 @@ Replicas are created under the user account that was used to create the asynchro

{% endnote %}

-### Initial table scan {#initial-scan}

+### Initial Table Scan {#initial-scan}

During the [initial table scan](cdc.md#initial-scan) the source data is exported to changefeeds. The target runs [consumers](topic.md#consumer) that read the source data from the changefeeds and write it to replicas.

You can get the progress of the initial table scan from the [description](../reference/ydb-cli/commands/scheme-describe.md) of the asynchronous replication instance.

-### Change data replication {#replication-of-changes}

+### Change Data Replication {#replication-of-changes}

After the initial table scan is completed, the consumers read the change data and write it to replicas.

@@ -97,7 +97,7 @@ You can also get the replication lag from the [description](../reference/ydb-cli

* During asynchronous replication, you cannot [add or delete columns](../yql/reference/syntax/alter_table/columns.md) in the source tables.

* During asynchronous replication, replicas are available only for reading.

-## Error handling during asynchronous replication {#error-handling}

+## Error Handling During Asynchronous Replication {#error-handling}

Possible errors during asynchronous replication can be grouped into the following classes:

@@ -110,9 +110,9 @@ Currently, asynchronous replication that is aborted due to a critical error cann

{% endnote %}

-For more information about error classes and how to address them, refer to [Error handling](../reference/ydb-sdk/error_handling.md).

+For more information about error classes and how to address them, refer to [Error Handling](../reference/ydb-sdk/error_handling.md).

-## Asynchronous replication completion {#done}

+## Asynchronous Replication Completion {#done}

Completion of asynchronous replication might be an end goal of data migration from one database to another. In this case the client stops writing data to the source, waits for the zero replication lag, and completes replication. After the replication process is completed, replicas become available both for reading and writing. Then you can switch the load from the source database to the target database and complete data migration.

@@ -130,7 +130,7 @@ You cannot resume completed asynchronous replication.

To complete asynchronous replication, use the [ALTER ASYNC REPLICATION](../yql/reference/syntax/alter-async-replication.md) YQL expression.

-## Dropping an asynchronous replication instance {#drop}

+## Dropping an Asynchronous Replication Instance {#drop}

When you drop an asynchronous replication instance:

diff --git a/ydb/docs/en/core/concepts/cdc.md b/ydb/docs/en/core/concepts/cdc.md

index d41cdb3ca96e..d98f453df0fd 100644

--- a/ydb/docs/en/core/concepts/cdc.md

+++ b/ydb/docs/en/core/concepts/cdc.md

@@ -11,7 +11,7 @@ When adding, updating, or deleting a table row, CDC generates a change record by

* Change records are sharded across topic partitions by primary key.

* Each change is only delivered once (exactly-once delivery).

* Changes by the same primary key are delivered to the same topic partition in the order they took place in the table.

-* Change record is delivered to the topic partition only after the corresponding transaction in the table has been committed.

+* Change records are delivered to the topic partition only after the corresponding transaction in the table has been committed.

## Limitations {#restrictions}

@@ -23,14 +23,14 @@ When adding, updating, or deleting a table row, CDC generates a change record by

Adding rows is a special update case, and a record of adding a row in a changefeed will look similar to an update record.

-## Virtual timestamps {#virtual-timestamps}

+## Virtual Timestamps {#virtual-timestamps}

All changes in {{ ydb-short-name }} tables are arranged according to the order in which transactions are performed. Each change is marked with a virtual timestamp which consists of two elements:

1. Global coordinator time.

-1. Unique transaction ID.

+2. Unique transaction ID.

-Using these stamps, you can arrange records from different partitions of the topic relative to each other or use them for filtering (for example, to exclude old change records).

+Using these timestamps, you can arrange records from different partitions of the topic relative to each other or use them for filtering (for example, to exclude old change records).

{% note info %}

@@ -38,13 +38,13 @@ By default, virtual timestamps are not uploaded to the changefeed. To enable the

{% endnote %}

-## Initial table scan {#initial-scan}

+## Initial Table Scan {#initial-scan}

By default, a changefeed only includes records about those table rows that changed after the changefeed was created. Initial table scan enables you to export, to the changefeed, the values of all the rows that existed at the time of changefeed creation.

The scan runs in the background mode on top of the table snapshot. The following situations are possible:

-* A non-scanned row changes in the table. The changefeed will receive, one after another: a record with the source value and a record about the update. When the same record is changed again, only the update record is exported.

+* A non-scanned row changes in the table. The changefeed will receive, one after another: a record with the source value and a record about the update. When the same record is changed again, only the update record is exported.

* A changed row is found during scanning. Nothing is exported to the changefeed because the source value has already been exported at the time of change (see the previous paragraph).

* A scanned row changes in the table. Only an update record exports to the changefeed.

@@ -64,11 +64,11 @@ During the scanning process, depending on the table update frequency, you might

{% endnote %}

-## Record structure {#record-structure}

+## Record Structure {#record-structure}

Depending on the [changefeed parameters](../yql/reference/syntax/alter_table/changefeed.md), the structure of a record may differ.

-### JSON format {#json-record-structure}

+### JSON Format {#json-record-structure}

A [JSON](https://en.wikipedia.org/wiki/JSON) record has the following structure:

@@ -154,7 +154,7 @@ Record with virtual timestamps:

{% if audience == "tech" %}

-### Amazon DynamoDB-compatible JSON format {#dynamodb-streams-json-record-structure}

+### Amazon DynamoDB-Compatible JSON Format {#dynamodb-streams-json-record-structure}

For [Amazon DynamoDB](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/Introduction.html)-compatible document tables, {{ ydb-short-name }} can generate change records in the [Amazon DynamoDB Streams](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/Streams.html)-compatible format.

@@ -169,7 +169,7 @@ The record structure is the same as for [Amazon DynamoDB Streams](https://docs.a

{% endif %}

-### Debezium-compatible JSON format {#debezium-json-record-structure}

+### Debezium-Compatible JSON Format {#debezium-json-record-structure}

A [Debezium](https://debezium.io)-compatible JSON record structure has the following format:

@@ -219,7 +219,7 @@ When reading using Kafka API, the Debezium-compatible primary key of the modifie

* `payload`: Key of a row that was changed. Contains names and values of the columns that are components of the primary key.

-## Record retention period {#retention-period}

+## Record Retention Period {#retention-period}

By default, records are stored in the changefeed for 24 hours from the time they are sent. Depending on usage scenarios, the retention period can be reduced or increased up to 30 days.

@@ -233,9 +233,9 @@ Deleting records before they are processed by the client will cause [offset](top

To set up the record retention period, specify the [RETENTION_PERIOD](../yql/reference/syntax/alter_table/changefeed.md) parameter when creating a changefeed.

-## Topic partitions {#topic-partitions}

+## Topic Partitions {#topic-partitions}

-By default, the number of [topic partitions](topic.md#partitioning) is equal to the number of table partitions. The number of topic partitions can be redefined by specifying [TOPIC_MIN_ACTIVE_PARTITIONS](../yql/reference/syntax/alter_table/changefeed.md) parameter when creating a changefeed.

+By default, the number of [topic partitions](topic.md#partitioning) is equal to the number of table partitions. The number of topic partitions can be redefined by specifying the [TOPIC_MIN_ACTIVE_PARTITIONS](../yql/reference/syntax/alter_table/changefeed.md) parameter when creating a changefeed.

{% note info %}

@@ -243,10 +243,10 @@ Currently, the ability to explicitly specify the number of topic partitions is a

{% endnote %}

-## Creating and deleting a changefeed {#ddl}

+## Creating and Deleting a Changefeed {#ddl}

You can add a changefeed to an existing table or erase it using the [ADD CHANGEFEED and DROP CHANGEFEED](../yql/reference/syntax/alter_table/changefeed.md) directives of the YQL `ALTER TABLE` statement. When erasing a table, the changefeed added to it is also deleted.

-## CDC purpose and use {#best_practices}

+## CDC Purpose and Use {#best_practices}

For information about using CDC when developing apps, see [best practices](../dev/cdc.md).

diff --git a/ydb/docs/en/core/concepts/column-table.md b/ydb/docs/en/core/concepts/column-table.md

index 926388448c95..43198502c55a 100644

--- a/ydb/docs/en/core/concepts/column-table.md

+++ b/ydb/docs/en/core/concepts/column-table.md

@@ -1,4 +1,4 @@

-# Сolumn-oriented table

+# Column-Oriented Table

{% note warning %}

@@ -6,7 +6,7 @@ Column-oriented {{ ydb-short-name }} tables are in the Preview mode.

{% endnote %}

-A column-oriented table in {{ ydb-short-name }} is a relational table containing a set of related data and made up of rows and columns. Unlike regular [row-oriented {{ ydb-short-name }} tables](#table) designed for [OLTP loads](https://ru.wikipedia.org/wiki/OLTP), column-oriented tables are optimized for data analytics and [OLAP loads](https://ru.wikipedia.org/wiki/OLAP).

+A column-oriented table in {{ ydb-short-name }} is a relational table containing a set of related data and made up of rows and columns. Unlike regular [row-oriented {{ ydb-short-name }} tables](#table) designed for [OLTP loads](https://en.wikipedia.org/wiki/OLTP), column-oriented tables are optimized for data analytics and [OLAP loads](https://en.wikipedia.org/wiki/OLAP).

The current primary use case for column-oriented tables is writing data with the increasing primary key, for example, event time, analyzing this data, and deleting expired data based on TTL. The optimal method of inserting data to column-oriented tables is batch writing in blocks of several megabytes.

@@ -15,7 +15,7 @@ The data batches are inserted atomically: the data will be written either to all

In most cases, working with column-oriented {{ ydb-short-name }} tables is similar to row-oriented tables. However, there are the following distinctions:

* You can only use NOT NULL columns as your key columns.

-* Data is not partitioned by the primary key but by the hash from the [partitioning columns](#olap-tables-partitioning).

+* Data is not partitioned by the primary key but by the hash from the [partitioning columns](#olap-tables-partitioning).

* A [limited set](#olap-data-types) of data types is supported.

What's currently not supported:

@@ -27,11 +27,11 @@ What's currently not supported:

* Change Data Capture

* Renaming tables

* Custom attributes in tables

-* Updating data column lists in column-oriented tables

+* Updating data column lists in column-oriented tables

* Adding data to column-oriented tables by the SQL `INSERT` operator

-* Deleting data from column-oriented tables using the SQL `DELETE` operator The data is actually deleted on TTL expiry.

+* Deleting data from column-oriented tables using the SQL `DELETE` operator. The data is actually deleted on TTL expiry.

-## Supported data types {#olap-data-types}

+## Supported Data Types {#olap-data-types}

| Data type | Can be used in

column-oriented tables | Can be used

as primary key |

---|---|---

@@ -64,7 +64,7 @@ Learn more in [{#T}](../yql/reference/types/index.md).

Unlike row-oriented {{ ydb-short-name }} tables, you cannot partition column-oriented tables by primary keys but only by specially designated partitioning keys. Partitioning keys constitute a subset of the table's primary keys.

-Unlike data partitioning in row-oriented {{ ydb-short-name }} tables, key values are not used to partition data in column-oriented tables. Hash values from keys are used instead. This way, you can uniformly distribute data across all your existing partitions. This kind of partitioning enables you to avoid hotspots at data insert, streamlining analytical queries that process (that is, read) large data amounts.

+Unlike data partitioning in row-oriented {{ ydb-short-name }} tables, key values are not used to partition data in column-oriented tables. Hash values from keys are used instead. This way, you can uniformly distribute data across all your existing partitions. This kind of partitioning enables you to avoid hotspots at data insert, streamlining analytical queries that process (that is, read) large data amounts.

How you select partitioning keys substantially affects the performance of your column-oriented tables. Learn more in [{#T}](../best_practices/pk-olap-scalability.md).

@@ -77,6 +77,6 @@ To manage data partitioning, use the `AUTO_PARTITIONING_MIN_PARTITIONS_COUNT` ad

Because it ignores all the other partitioning parameters, the system uses the same value as the upper partition limit.

-## See also {#see-also}

+## See Also {#see-also}

* [{#T}](../yql/reference/syntax/create_table.md#olap-tables)

diff --git a/ydb/docs/en/core/concepts/connect.md b/ydb/docs/en/core/concepts/connect.md

index b62c09cee8aa..05de01df45b3 100644

--- a/ydb/docs/en/core/concepts/connect.md

+++ b/ydb/docs/en/core/concepts/connect.md

@@ -1,10 +1,10 @@

-# Connecting to a database

+# Connecting to a Database

To connect to a {{ ydb-short-name }} database from the {{ ydb-short-name }} CLI or an app running the {{ ydb-short-name }} SDK, specify your [endpoint](#endpoint) and [database path](#database).

## Endpoint {#endpoint}

-An endpoint is a string structured as `protocol://host:port` and provided by a {{ ydb-short-name }} cluster owner for proper routing of client queries to its databases by way of a network infrastructure as well as for proper network connections. Cloud databases display the endpoint in the management console on the requisite DB page and also normally send it via the cloud provider's CLI. In corporate environments, endpoint names {{ ydb-short-name }} are provided by the administration team or obtained in the internal cloud management console.

+An endpoint is a string structured as `protocol://host:port` and provided by a {{ ydb-short-name }} cluster owner for proper routing of client queries to its databases by way of a network infrastructure as well as for proper network connections. Cloud databases display the endpoint in the management console on the requisite DB page and also normally send it via the cloud provider's CLI. In corporate environments, {{ ydb-short-name }} endpoint names are provided by the administration team or obtained in the internal cloud management console.

{% include [overlay/endpoint.md](_includes/connect_overlay/endpoint.md) %}

@@ -14,9 +14,9 @@ Examples:

* `grpcs://ydb.example.com` is an encrypted data interchange protocol (gRPCs) with the server running on the ydb.example.com host on an isolated corporate network and listening for connections on YDB default port 2135.

* `grpcs://ydb.serverless.yandexcloud.net:2135` is an encrypted data interchange protocol (gRPCs), public {{ yandex-cloud }} Serverless YDB server at ydb.serverless.yandexcloud.net, port 2135.

-## Database path {#database}

+## Database Path {#database}

-Database path (`database`) is a string that defines where the queried database is located in the {{ ydb-short-name }} cluster. Has the [format](https://en.wikipedia.org/wiki/Path_(computing)) and uses the `/` character as separator. It always starts with a `/`.

+Database path (`database`) is a string that defines where the queried database is located in the {{ ydb-short-name }} cluster. It has the [format](https://en.wikipedia.org/wiki/Path_(computing)) and uses the `/` character as separator. It always starts with a `/`.

A {{ ydb-short-name }} cluster may have multiple databases deployed, with their paths determined by the cluster configuration. Like the endpoint, `database` for cloud databases is displayed in the management console on the desired database page, and can also be obtained via the CLI of the cloud provider.

@@ -35,7 +35,7 @@ Examples:

* `/ru-central1/b1g8skpblkos03malf3s/etn01q5ko6sh271beftr` is a {{ yandex-cloud }} database with `etn01q3ko8sh271beftr` as ID deployed in the `b1g8skpbljhs03malf3s` cloud in the `ru-central1` region.

* `/local` is the default database for custom deployment [using Docker](../quickstart.md).

-## Connection string {#connection_string}

+## Connection String {#connection_string}

A connection string is a URL-formatted string that specifies the endpoint and path to a database using the following syntax:

@@ -48,8 +48,8 @@ Examples:

Using a connection string is an alternative to specifying the endpoint and database path separately and can be used in tools that support this method.

-## A root certificate for TLS {#tls-cert}

+## A Root Certificate for TLS {#tls-cert}

When using an encrypted protocol ([gRPC over TLS](https://grpc.io/docs/guides/auth/), or gRPCS), a network connection can only be continued if the client is sure that it receives a response from the genuine server that it is trying to connect to, rather than someone in-between intercepting its request on the network. This is assured by verifications through a [chain of trust](https://en.wikipedia.org/wiki/Chain_of_trust), for which you need to install a root certificate on your client.

-The OS that the client runs on already include a set of root certificates from the world's major certification authorities. However, the {{ ydb-short-name }} cluster owner can use its own CA that is not associated with any of the global ones, which is often the case in corporate environments, and is almost always used for self-deployment of clusters with connection encryption support. In this case, the cluster owner must somehow transfer its root certificate for use on the client side. This certificate may be installed in the operating system's certificate store where the client runs (manually by a user or by a corporate OS administration team) or built into the client itself (as is the case for {{ yandex-cloud }} in {{ ydb-short-name }} CLI and SDK).

+The OS that the client runs on already includes a set of root certificates from the world's major certification authorities. However, the {{ ydb-short-name }} cluster owner can use its own CA that is not associated with any of the global ones, which is often the case in corporate environments, and is almost always used for self-deployment of clusters with connection encryption support. In this case, the cluster owner must somehow transfer its root certificate for use on the client side. This certificate may be installed in the operating system's certificate store where the client runs (manually by a user or by a corporate OS administration team) or built into the client itself (as is the case for {{ yandex-cloud }} in {{ ydb-short-name }} CLI and SDK).

diff --git a/ydb/docs/en/core/concepts/datamodel/_includes/object-naming-rules.md b/ydb/docs/en/core/concepts/datamodel/_includes/object-naming-rules.md

index c12dfd0333be..3f69df9711a7 100644

--- a/ydb/docs/en/core/concepts/datamodel/_includes/object-naming-rules.md

+++ b/ydb/docs/en/core/concepts/datamodel/_includes/object-naming-rules.md

@@ -1,24 +1,24 @@

-## Database object naming rules {#object-naming-rules}

+## Database Object Naming Rules {#object-naming-rules}

Every [scheme object](../../../concepts/glossary.md#scheme-object) in {{ ydb-short-name }} has a name. In YQL statements, object names are specified by identifiers that can be enclosed in backticks or not. For more information on identifiers, refer to [{#T}](../../../yql/reference/syntax/lexer.md#keywords-and-ids).

Scheme object names in {{ ydb-short-name }} must meet the following requirements:

- Object names can include the following characters:

- - uppercase latin characters

- - lowercase latin characters

- - digits

- - special characters: `.`, `-`, and `_`.

+ - Uppercase Latin characters

+ - Lowercase Latin characters

+ - Digits

+ - Special characters: `.`, `-`, and `_`

- Object name length must not exceed 255 characters.

-- Objects cannot be created in folders, which names start with a dot, such as `.sys`, `.medatata`, `.sys_health`.

+- Objects cannot be created in folders which names start with a dot, such as `.sys`, `.metadata`, and `.sys_health`.

-## Column naming rules {#column-naming-rules}

+## Column Naming Rules {#column-naming-rules}

Column names in {{ ydb-short-name }} must meet the following requirements:

- Column names can include the following characters:

- - uppercase latin characters

- - lowercase latin characters

- - digits

- - special characters: `-` and `_`.

+ - Uppercase Latin characters

+ - Lowercase Latin characters

+ - Digits

+ - Special characters: `-` and `_`

- Column names must not start with the system prefix `__ydb_`.

diff --git a/ydb/docs/en/core/concepts/datamodel/_includes/table.md b/ydb/docs/en/core/concepts/datamodel/_includes/table.md

index eb7c2cfaf769..6b97f606512f 100644

--- a/ydb/docs/en/core/concepts/datamodel/_includes/table.md

+++ b/ydb/docs/en/core/concepts/datamodel/_includes/table.md

@@ -17,23 +17,23 @@ CREATE TABLE article (

Please note that currently, the `NOT NULL` constraint can only be applied to columns that are part of the primary key.

-{{ ydb-short-name }} supports the creation of both row-oriented and column-oriented tables. The primary difference between them lies in their use-cases and how data is stored on the disk drive. In row-oriented tables, data is stored sequentially in the form of rows, while in column-oriented tables, data is stored in the form of columns. Each table type has its own specific purpose.

+{{ ydb-short-name }} supports the creation of both row-oriented and column-oriented tables. The primary difference between them lies in their use cases and how data is stored on the disk drive. In row-oriented tables, data is stored sequentially in the form of rows, while in column-oriented tables, data is stored in the form of columns. Each table type has its own specific purpose.

-## Row-oriented tables {#row-oriented-tables}

+## Row-Oriented Tables {#row-oriented-tables}

Row-oriented tables are well-suited for transactional queries generated by Online Transaction Processing (OLTP) systems, such as weather service backends or online stores. Row-oriented tables offer efficient access to a large number of columns simultaneously. Lookups in row-oriented tables are optimized due to the utilization of indexes.

An index is a data structure that improves the speed of data retrieval operations based on one or several columns. It's analogous to an index in a book: instead of scanning every page of the book to find a specific chapter, you can refer to the index at the back of the book and quickly navigate to the desired page.

-Searching using an index allows you to swiftly locate the required rows without scanning through all the data. For instance, if you have an index on the “author” column and you're looking for articles written by “Gray”, the DBMS leverages this index to quickly identify all rows associated with that surname.

+Searching using an index allows you to swiftly locate the required rows without scanning through all the data. For instance, if you have an index on the "author" column and you're looking for articles written by "Gray," the DBMS leverages this index to quickly identify all rows associated with that surname.

You can create a row-oriented table through the {{ ydb-short-name }} web interface, CLI, or SDK. Regardless of the method you choose to interact with {{ ydb-short-name }}, it's important to keep in mind the following rule: the table must have at least one primary key column, and it's permissible to create a table consisting solely of primary key columns.

By default, when creating a row-oriented table, all columns are optional and can have `NULL` values. This behavior can be modified by setting the `NOT NULL` conditions for key columns that are part of the primary key. Primary keys are unique, and row-oriented tables are always sorted by this key. This means that point reads by the key, as well as range queries by key or key prefix, are efficiently executed (essentially using an index). It's permissible to create a table consisting solely of key columns. When choosing a key, it's crucial to be careful, so we recommend reviewing the article: ["Choosing a Primary Key for Maximum Performance"](../../../dev/primary-key/row-oriented.md).

-### Partitioning row-oriented tables {#partitioning}

+### Partitioning Row-Oriented Tables {#partitioning}

-A row-oriented database table can be partitioned by primary key value ranges. Each partition of the table is responsible for the specific section of primary keys. Key ranges served by different partitions do not overlap. Different table partitions can be served by different cluster nodes (including ones in different locations). Partitions can also move independently between servers to enable rebalancing or ensure partition operability if servers or network equipment goes offline.

+A row-oriented database table can be partitioned by primary key value ranges. Each partition of the table is responsible for a specific section of primary keys. Key ranges served by different partitions do not overlap. Different table partitions can be served by different cluster nodes (including ones in different locations). Partitions can also move independently between servers to enable rebalancing or ensure partition operability if servers or network equipment goes offline.

If there is not a lot of data or load, the table may consist of a single shard. As the amount of data served by the shard or the load on the shard grows, {{ ydb-short-name }} automatically splits this shard into two shards. The data is split by the median value of the primary key if the shard size exceeds the threshold. If partitioning by load is used, the shard first collects a sample of the requested keys (that can be read, written, and deleted) and, based on this sample, selects a key for partitioning to evenly distribute the load across new shards. So in the case of load-based partitioning, the size of new shards may significantly vary.

@@ -41,7 +41,7 @@ The size-based shard split threshold and automatic splitting can be configured (

In addition to automatically splitting shards, you can create an empty table with a predefined number of shards. You can manually set the exact shard key split range or evenly split it into a predefined number of shards. In this case, ranges are created based on the first component of the primary key. You can set even splitting for tables that have a `Uint64` or `Uint32` integer as the first component of the primary key.

-Partitioning parameters refer to the table itself rather than to secondary indexes built on its data. Each index is served by its own set of shards and decisions to split or merge its partitions are made independently based on the default settings. These settings may become available to users in the future like the settings of the main table.

+Partitioning parameters refer to the table itself rather than to secondary indexes built on its data. Each index is served by its own set of shards, and decisions to split or merge its partitions are made independently based on the default settings. These settings may become available to users in the future like the settings of the main table.

A split or a merge usually takes about 500 milliseconds. During this time, the data involved in the operation becomes temporarily unavailable for reads and writes. Without raising it to the application level, special wrapper methods in the {{ ydb-short-name }} SDK make automatic retries when they discover that a shard is being split or merged. Please note that if the system is overloaded for some reason (for example, due to a general shortage of CPU or insufficient DB disk throughput), split and merge operations may take longer.

@@ -68,7 +68,7 @@ When choosing the minimum number of partitions, it makes sense to consider that

#### AUTO_PARTITIONING_PARTITION_SIZE_MB {#auto_partitioning_partition_size_mb}

* Type: `Uint64`.

-* Default value: `2000 MB` ( `2 GB` ).

+* Default value: `2000 MB` (`2 GB`).

The desired partition size threshold in megabytes. Recommended values range from `10 MB` to `2000 MB`. If this threshold is exceeded, a shard may split. This setting takes effect when the [`AUTO_PARTITIONING_BY_SIZE`](#auto_partitioning_by_size) mode is enabled.

@@ -86,7 +86,7 @@ Partitions are only merged if their actual number exceeds the value specified by

* Type: `Uint64`.

* Default value: `50`.

-Partitions are only split if their number doesn't exceed the value specified by this parameter. With any automatic partitioning mode enabled, we recommend that you set a meaningful value for this parameter and monitor when the actual number of partitions approaches this value, otherwise splitting of partitions will stop sooner or later under an increase in data or load, which will lead to a failure.

+Partitions are only split if their number doesn't exceed the value specified by this parameter. With any automatic partitioning mode enabled, we recommend that you set a meaningful value for this parameter and monitor when the actual number of partitions approaches this value; otherwise, splitting of partitions will stop sooner or later under an increase in data or load, which will lead to a failure.

#### UNIFORM_PARTITIONS {#uniform_partitions}

@@ -106,7 +106,7 @@ Boundary values of keys for initial table partitioning. It's a list of boundary

When automatic partitioning is enabled, make sure to set the correct value for [AUTO_PARTITIONING_MIN_PARTITIONS_COUNT](#auto_partitioning_min_partitions_count) to avoid merging all partitions into one immediately after creating the table.

-### Reading data from replicas {#read_only_replicas}

+### Reading Data from Replicas {#read_only_replicas}

When making queries in {{ ydb-short-name }}, the actual execution of a query to each shard is performed at a single point serving the distributed transaction protocol. By storing data in shared storage, you can run one or more shard followers without allocating additional storage space: the data is already stored in replicated format, and you can serve more than one reader (but there is still only one writer at any given moment).

@@ -128,9 +128,9 @@ The internal state of each of the followers is restored exactly and fully consis

Besides the data state in storage, followers also receive a stream of updates from the leader. Updates are sent in real time, immediately after the commit to the log. However, they are sent asynchronously, resulting in some delay (usually no more than dozens of milliseconds, but sometimes longer in the event of cluster connectivity issues) in applying updates to followers relative to their commit on the leader. Therefore, reading data from followers is only supported in the [transaction mode](../../transactions.md#modes) `StaleReadOnly()`.

If there are multiple followers, their delay from the leader may vary: although each follower of each of the shards retains internal consistency, artifacts may be observed from shard to shard. Please provide for this in your application code. For that same reason, it's currently impossible to perform cross-shard transactions from followers.

-### Deleting expired data (TTL) {#ttl}

+### Deleting Expired Data (TTL) {#ttl}

-{{ ydb-short-name }} supports automatic background deletion of expired data. A table data schema may define a column containing a `Datetime` or a `Timestamp` value. A comparison of this value with the current time for all rows will be performed in the background. Rows for which the current time becomes greater than the column value plus specified delay, will be deleted.

+{{ ydb-short-name }} supports automatic background deletion of expired data. A table data schema may define a column containing a `Datetime` or a `Timestamp` value. A comparison of this value with the current time for all rows will be performed in the background. Rows for which the current time becomes greater than the column value plus specified delay will be deleted.

| Parameter name | Type | Acceptable values | Update capability | Reset capability |

| ------------- | --- | ------------------- | --------------------- | ------------------ |

@@ -138,18 +138,18 @@ If there are multiple followers, their delay from the leader may vary: although

Syntax of TTL value is described in the article [{#T}](../../../yql/reference/syntax/create_table/with.md#time-to-live). For more information about deleting expired data, see [Time to Live (TTL)](../../../concepts/ttl.md).

-### Renaming a table {#rename}

+### Renaming a Table {#rename}

{{ ydb-short-name }} lets you rename an existing table, move it to another directory of the same database, or replace one table with another, deleting the data in the replaced table. Only the metadata of the table is changed by operations (for example, its path and name). The table data is neither moved nor overwritten.

-Operations are performed in isolation, the external process sees only two states of the table: before and after the operation. This is critical, for example, for table replacement: the data of the replaced table is deleted by the same transaction that renames the replacing table. During the replacement, there might be errors in queries to the replaced table that have [retryable statuses](../../../reference/ydb-sdk/error_handling.md#termination-statuses).

+Operations are performed in isolation; the external process sees only two states of the table: before and after the operation. This is critical, for example, for table replacement: the data of the replaced table is deleted by the same transaction that renames the replacing table. During the replacement, there might be errors in queries to the replaced table that have [retryable statuses](../../../reference/ydb-sdk/error_handling.md#termination-statuses).

The speed of renaming is determined by the type of data transactions currently running against the table and doesn't depend on the table size.

* [Renaming a table in YQL](../../../yql/reference/syntax/alter_table/rename.md)

* [Renaming a table via the CLI](../../../reference/ydb-cli/commands/tools/rename.md)

-### Bloom filter {#bloom-filter}

+### Bloom Filter {#bloom-filter}

Using a [Bloom filter](https://en.wikipedia.org/wiki/Bloom_filter) lets you more efficiently determine if some keys are missing in a table when making multiple point queries by primary key. This reduces the number of required disk I/O operations but increases the amount of memory consumed.

@@ -157,7 +157,7 @@ Using a [Bloom filter](https://en.wikipedia.org/wiki/Bloom_filter) lets you more

| ------------- | --- | ------------------- | --------------------- | ------------------ |

| `KEY_BLOOM_FILTER` | Enum | `ENABLED`, `DISABLED` | Yes | No |

-### Column groups {#column-groups}

+### Column Groups {#column-groups}

{{ ydb-short-name }} allows grouping columns in a table to optimize their storage and usage. The column group mechanism improves performance for partial row reads by separating table columns into multiple storage groups. The most commonly used scenario is the organization of storing rarely used attributes in a separate column group. Then you can enable data compression and/or store it on slower drives.

@@ -178,7 +178,7 @@ Accessing data in primary column group fields is faster and less resource-intens